Cilium is an open source, cloud native solution for providing, securing, and observing network connectivity between workloads, powered by the Linux kernel technology eBPF. It is mainly used to transparently secure network connectivity between application services deployed on Linux container management platforms such as Docker and Kubernetes. All major cloud providers are working to adopt Cilium for their managed Kubernetes services. Google has implemented its new GKE networking model, Dataplane V2, using Cilium. One of the biggest advantages of Dataplane V2 is that it makes it easier to investigate networking issues on GKE. I recently ran into a networking issue on GKE Autopilot while setting up a JupyterHub instance. This post describes the detailed steps for troubleshooting Cilium on Google Kubernetes Engine, using that JupyterHub deployment issue as a running example.

Prerequisites

- Basic understanding of Cilium

- Understanding of containerized application architecture

- Understanding of Kubernetes architecture

- Basic understanding of Helm charts

- Experience with GKE (Google Kubernetes Engine)

- Access to Google Cloud Platform

Troubleshooting details

1: Create an instance of GKE Standard cluster

Keep in mind that the Dataplane V2 containers run in the kube-system namespace. You cannot troubleshoot Dataplane V2 this way on GKE Autopilot, because Google does not allow access to pods in the system namespace there. That is why this walkthrough uses a GKE Standard cluster.

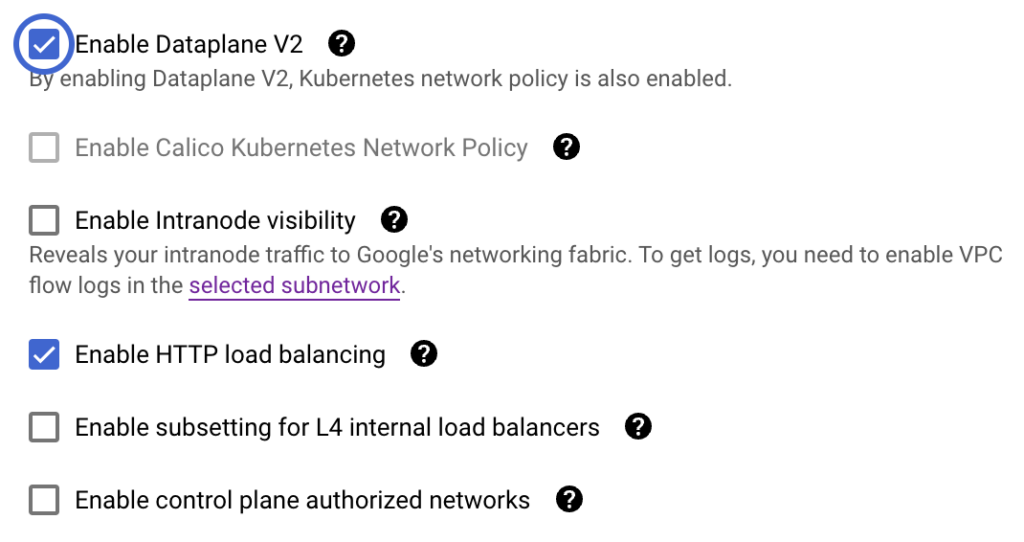

In the cluster's networking settings, select ‘Enable Dataplane V2’.
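If you prefer the command line to the console, the same setup can be sketched with gcloud. This is a minimal sketch, not the exact procedure used for this post: the cluster name and zone are inferred from the node name and zone labels that appear in the output further down, and the two function names are made up for illustration. It assumes the `--enable-dataplane-v2` flag and the `networkConfig.datapathProvider` field behave as described in the GKE documentation.

```shell
# Sketch only: cluster name and zone are inferred from the node name and
# labels shown later in this post; substitute your own values.
CLUSTER_NAME="test-cluster-1"
ZONE="us-central1-c"

# Create a Standard cluster with Dataplane V2 enabled (hypothetical helper).
create_dpv2_cluster() {
  gcloud container clusters create "$1" --zone "$2" --enable-dataplane-v2
}

# Succeed only if the cluster reports the Dataplane V2 datapath provider
# (hypothetical helper).
dataplane_v2_enabled() {
  provider="$(gcloud container clusters describe "$1" --zone "$2" \
    --format='value(networkConfig.datapathProvider)')"
  [ "$provider" = "ADVANCED_DATAPATH" ]
}

# Usage (commented out so that sourcing this file has no side effects):
# create_dpv2_cluster "$CLUSTER_NAME" "$ZONE"
# dataplane_v2_enabled "$CLUSTER_NAME" "$ZONE" && echo "Dataplane V2 is enabled"
```

Checking the datapath provider first is a cheap way to confirm that the anetd pods in the next step should exist at all.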

2: Check the pods in kube-system namespace

kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

anetd-8w4nl 1/1 Running 0 41m

anetd-pcqvt 1/1 Running 0 41m

anetd-qlvrt 1/1 Running 0 41m

antrea-controller-horizontal-autoscaler-7b69d9bfd7-rrc7w 1/1 Running 0 41m

container-watcher-gzktg 1/1 Running 0 40m

container-watcher-jsxrw 1/1 Running 0 40m

container-watcher-rxxp8 1/1 Running 0 40m

event-exporter-gke-7bf6c99dcb-ms824 2/2 Running 0 42m

fluentbit-gke-2cccd 2/2 Running 0 41m

fluentbit-gke-kd66j 2/2 Running 0 41m

fluentbit-gke-lxtcl 2/2 Running 0 41m

gke-metrics-agent-6sdb4 2/2 Running 0 41m

gke-metrics-agent-ncdbn 2/2 Running 0 41m

gke-metrics-agent-phrl6 2/2 Running 0 41m

konnectivity-agent-85d7bd5497-fqpvc 1/1 Running 0 40m

konnectivity-agent-85d7bd5497-ljwlf 1/1 Running 0 41m

konnectivity-agent-85d7bd5497-xhrvw 1/1 Running 0 40m

konnectivity-agent-autoscaler-5d9dbcc6d8-77nnf 1/1 Running 0 41m

kube-dns-865c4fb86d-bhrc7 4/4 Running 0 42m

kube-dns-865c4fb86d-zgl97 4/4 Running 0 40m

kube-dns-autoscaler-84b8db4dc7-8hb59 1/1 Running 0 42m

l7-default-backend-f77b65cbd-jnbdt 1/1 Running 0 41m

metrics-server-v0.5.2-6bf74b5d5f-t9zsk 2/2 Running 0 39m

netd-4k6w7 1/1 Running 0 40m

netd-79htm 1/1 Running 0 40m

netd-m2ld5 1/1 Running 0 40m

node-local-dns-2qlz9 1/1 Running 0 40m

node-local-dns-lg74b 1/1 Running 0 40m

node-local-dns-xgl4l 1/1 Running 0 40m

pdcsi-node-4kd74 2/2 Running 0 40m

pdcsi-node-swx48 2/2 Running 0 41m

pdcsi-node-vskbz 2/2 Running 0 40m

The pods whose names start with anetd are the Dataplane V2 pods that run Cilium, e.g. anetd-8w4nl.
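On a busy cluster the kube-system listing gets long, so it can help to filter it down to the anetd pods. The helper below is a small sketch that reads the `kubectl get pods` output on stdin and relies only on the anetd name prefix described above. The function name `anetd_pods` is hypothetical.

```shell
# Read `kubectl get pods -n kube-system` output on stdin and print the names
# of the Dataplane V2 (anetd) pods that are in the Running state.
anetd_pods() {
  awk '$1 ~ /^anetd-/ && $3 == "Running" { print $1 }'
}

# Usage:
# kubectl get pods -n kube-system | anetd_pods
```

There is one anetd pod per node, so pick the one running on the node that hosts the workload you are investigating.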

3: Get a cilium container shell from one of the anetd pods

kubectl exec -it -n kube-system anetd-8w4nl -- sh -c 'bash'

4: Identify cilium instance details

root@gke-test-cluster-1-default-pool-d0991fd4-c36l:/home/cilium# cilium status

level=info msg="Initializing Google metrics" subsys=metrics

KVStore: Ok Disabled

Kubernetes: Ok 1.27 (v1.27.2-gke.1200) [linux/amd64]

Kubernetes APIs: ["cilium/v2::CiliumLocalRedirectPolicy", "cilium/v2::CiliumNode", "cilium/v2alpha1::CiliumEndpointSlice", "core/v1::Namespace", "core/v1::Node", "core/v1::Pods", "core/v1::Service", "discovery/v1::EndpointSlice", "networking.k8s.io/v1::NetworkPolicy"]

KubeProxyReplacement: Strict [eth0 10.128.0.29 (Direct Routing)]

Host firewall: Disabled

CNI Chaining: generic-veth

Cilium: Ok 1.12.10 (v1.12.10-4893d97756)

NodeMonitor: Listening for events on 2 CPUs with 64x4096 of shared memory

IPAM: IPv4: 0/254 allocated from 10.88.0.0/24,

BandwidthManager: Disabled

Host Routing: Legacy

Masquerading: Disabled

Controller Status: 50/50 healthy

Proxy Status: OK, ip 169.254.4.6, 0 redirects active on ports 10000-20000

Global Identity Range: min 256, max 65535

Hubble: Ok Current/Max Flows: 63/63 (100.00%), Flows/s: 18.55 Metrics: Disabled

Encryption: Disabled

Cluster health: Probe disabled
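You do not have to open an interactive shell for every check. The sketch below wraps `kubectl exec` so that a single cilium subcommand runs in an anetd pod, and adds a small filter that pulls one field out of the `cilium status` text. Both function names are hypothetical, and the sketch assumes that `kubectl exec` without a container name lands in the Cilium agent container, as it does in the session above.

```shell
# Run a cilium CLI command inside an anetd pod without opening a shell.
# $1 is the pod name; the remaining arguments are passed to `cilium`.
cilium_in_pod() {
  pod="$1"
  shift
  kubectl exec -n kube-system "$pod" -- cilium "$@"
}

# Read `cilium status` output on stdin and print the value for one key,
# for example "KubeProxyReplacement" or "Cilium".
status_field() {
  awk -v key="$1:" 'index($0, key) == 1 {
    sub(/^[^:]*:[ \t]*/, "")   # drop the key and the padding after the colon
    print
    exit
  }'
}

# Usage:
# cilium_in_pod anetd-8w4nl status | status_field KubeProxyReplacement
```

For the status shown above, the `Cilium` field would read `Ok 1.12.10 (v1.12.10-4893d97756)`, which tells you which upstream Cilium release this GKE version is based on.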

5: Find cilium configuration details

root@gke-test-cluster-1-default-pool-d0991fd4-c36l:/home/cilium# cilium config --all

level=info msg="Initializing Google metrics" subsys=metrics

#### Read-only configurations ####

APIRateLimit

ARPPingKernelManaged : false

ARPPingRefreshPeriod : 30000000000

AddressScopeMax : 252

AgentHealthPort : 9879

AgentLabels : []

AgentNotReadyNodeTaintKey : node.cilium.io/agent-not-ready

AllocatorListTimeout : 180000000000

AllowDisableSourceIPValidation : false

AllowICMPFragNeeded : true

AllowIMDSAccessInHostNSOnly : false

AllowLocalhost : always

AnnotateK8sNode : false

AutoCreateCiliumNodeResource : true

BGPAnnounceLBIP : false

BGPAnnouncePodCIDR : false

BGPConfigPath : /var/lib/cilium/bgp/config.yaml

BPFCompileDebug :

BPFMapsDynamicSizeRatio : 0.0025

BPFRoot :

BPFSocketLBHostnsOnly : true

BpfDir : /var/lib/cilium/bpf

BypassIPAvailabilityUponRestore : false

CGroupRoot :

CNIChainingMode : generic-veth

CRDWaitTimeout : 300000000000

CTMapEntriesGlobalAny : 65536

CTMapEntriesGlobalTCP : 131072

CTMapEntriesTimeoutAny : 60000000000

CTMapEntriesTimeoutFIN : 10000000000

CTMapEntriesTimeoutSVCAny : 60000000000

CTMapEntriesTimeoutSVCTCP : 21600000000000

CTMapEntriesTimeoutSVCTCPGrace : 60000000000

CTMapEntriesTimeoutSYN : 60000000000

CTMapEntriesTimeoutTCP : 21600000000000

CertDirectory : /var/run/cilium/certs

CgroupPathMKE :

ClockSource : 1

ClusterHealthPort : 4240

ClusterID : 0

ClusterMeshConfig : /var/lib/cilium/clustermesh/

ClusterMeshHealthPort : 0

ClusterName : default

CompilerFlags : []

ConfigDir : /tmp/cilium/config-map

ConfigFile :

ConntrackGCInterval : 0

CreationTime : 2023-07-27T16:17:00.641956736Z

DNSMaxIPsPerRestoredRule : 1000

DNSPolicyUnloadOnShutdown : false

DNSProxyConcurrencyLimit : 1000

DNSProxyConcurrencyProcessingGracePeriod: 0

DatapathMode : veth

Debug : false

DebugVerbose : []

DeriveMasqIPAddrFromDevice :

DevicePrefixesToExclude : []

Devices : [eth0]

DirectRoutingDevice : eth0

DisableCNPStatusUpdates : true

DisableCiliumEndpointCRD : false

DisableCiliumNetworkPolicyCRD : true

DisableEnvoyVersionCheck : false

DisableIPv6Tunnel : false

DisableIptablesFeederRules : []

DisablePodToRemoteNodeTunneling : false

DisablePolicyEventCountMetric : false

DryMode : false

EgressMasqueradeInterfaces :

EgressMultiHomeIPRuleCompat : false

EnableAutoDirectRouting : false

EnableAutoDirectRoutingIPv4 : false

EnableAutoDirectRoutingIPv6 : false

EnableAutoProtectNodePortRange : true

EnableBBR : false

EnableBGPControlPlane : false

EnableBPFClockProbe : true

EnableBPFMasquerade : false

EnableBPFTProxy : false

EnableBandwidthManager : false

EnableCiliumEndpointSlice : true

EnableCustomCalls : false

EnableEndpointHealthChecking : false

EnableEndpointRoutes : true

EnableEnvoyConfig : false

EnableExternalIPs : true

EnableFQDNNetworkPolicy : false

EnableFlatIPv4 : false

EnableGDCILB : false

EnableGNG : false

EnableGoogleMultiNIC : false

EnableGoogleServiceSteering : false

EnableHealthCheckNodePort : true

EnableHealthChecking : false

EnableHealthDatapath : false

EnableHostFirewall : false

EnableHostIPRestore : true

EnableHostLegacyRouting : true

EnableHostPort : true

EnableHostServicesPeer : true

EnableHostServicesTCP : true

EnableHostServicesUDP : true

EnableHubble : true

EnableHubbleRecorderAPI : true

EnableICMPRules : true

EnableIPMasqAgent : false

EnableIPSec : false

EnableIPv4 : true

EnableIPv4EgressGateway : false

EnableIPv4FragmentsTracking : true

EnableIPv4Masquerade : false

EnableIPv6 : false

EnableIPv6Masquerade : false

EnableIPv6NDP : false

EnableIdentityMark : true

EnableIngressController : false

EnableK8sTerminatingEndpoint : true

EnableL2NeighDiscovery : true

EnableL7Proxy : true

EnableLocalNodeRoute : false

EnableLocalRedirectPolicy : true

EnableMKE : false

EnableMergeCIDRPrefixIPLabels : true

EnableMonitor : true

EnableNodeNetworkPolicyCRD : true

EnableNodePort : true

EnablePMTUDiscovery : false

EnablePolicy : default

EnableRecorder : false

EnableRedirectService : true

EnableRemoteNodeIdentity : true

EnableRuntimeDeviceDetection : false

EnableSCTP : false

EnableSVCSourceRangeCheck : true

EnableServiceTopology : true

EnableSessionAffinity : true

EnableSocketLB : true

EnableStaleCiliumEndpointCleanup : true

EnableTHC : false

EnableTracing : false

EnableTrafficSteering : false

EnableUnreachableRoutes : false

EnableVTEP : false

EnableWellKnownIdentities : false

EnableWireguard : false

EnableWireguardUserspaceFallback : false

EnableXDPPrefilter : false

EnableXTSocketFallback : true

EncryptInterface : []

EncryptNode : false

EndpointGCInterval : 300000000000

EndpointQueueSize : 25

EndpointStatus

EnvoyConfigTimeout : 120000000000

EnvoyLog :

EnvoyLogPath :

EnvoySecretNamespace :

ExcludeLocalAddresses : <nil>

ExternalClusterIP : false

FQDNProxyResponseMaxDelay : 100000000

FQDNRegexCompileLRUSize : 1024

FQDNRejectResponse : refused

FixedIdentityMapping

ForceLocalPolicyEvalAtSource : true

FragmentsMapEntries : 8192

HTTP403Message :

HTTPIdleTimeout : 0

HTTPMaxGRPCTimeout : 0

HTTPNormalizePath : true

HTTPRequestTimeout : 3600

HTTPRetryCount : 3

HTTPRetryTimeout : 0

HostV4Addr :

HostV6Addr :

HubbleEventBufferCapacity : 63

HubbleEventQueueSize : 2048

HubbleExportFileCompress : false

HubbleExportFileMaxBackups : 5

HubbleExportFileMaxSizeMB : 10

HubbleExportFilePath :

HubbleListenAddress :

HubbleMetrics : []

HubbleMetricsServer :

HubbleMonitorEvents : []

HubbleRecorderSinkQueueSize : 1024

HubbleRecorderStoragePath : /var/run/cilium/pcaps

HubbleSocketPath : /var/run/cilium/hubble.sock

HubbleTLSCertFile :

HubbleTLSClientCAFiles : []

HubbleTLSDisabled : false

HubbleTLSKeyFile :

IPAM : kubernetes

IPAllocationTimeout : 120000000000

IPMasqAgentConfigPath : /etc/config/ip-masq-agent

IPSecKeyFile :

IPTablesLockTimeout : 5000000000

IPTablesRandomFully : false

IPv4NativeRoutingCIDR : <nil>

IPv4NodeAddr : auto

IPv4PodSubnets : []

IPv4Range : auto

IPv4ServiceRange : auto

IPv6ClusterAllocCIDR : f00d::/64

IPv6ClusterAllocCIDRBase : f00d::

IPv6MCastDevice :

IPv6NativeRoutingCIDR : <nil>

IPv6NodeAddr : auto

IPv6PodSubnets : []

IPv6Range : auto

IPv6ServiceRange : auto

IdentityAllocationMode : crd

IdentityChangeGracePeriod : 5000000000

IdentityRestoreGracePeriod : 600000000000

InstallEgressGatewayRoutes : false

InstallIptRules : true

InstallNoConntrackIptRules : false

JoinCluster : false

K8sAPIServer : https://10.128.0.28:443

K8sClientBurst : 0

K8sClientQPSLimit : 0

K8sEnableAPIDiscovery : false

K8sEnableK8sEndpointSlice : true

K8sEnableLeasesFallbackDiscovery : false

K8sEventHandover : false

K8sHeartbeatTimeout : 30000000000

K8sInterfaceOnly : false

K8sKubeConfigPath : /var/lib/kubelet/kubeconfig

K8sNamespace : kube-system

K8sRequireIPv4PodCIDR : true

K8sRequireIPv6PodCIDR : false

K8sServiceCacheSize : 128

K8sServiceProxyName :

K8sSyncTimeout : 180000000000

K8sWatcherEndpointSelector : metadata.name!=kube-scheduler,metadata.name!=kube-controller-manager,metadata.name!=etcd-operator,metadata.name!=gcp-controller-manager

KVStore :

KVStoreOpt

KVstoreConnectivityTimeout : 120000000000

KVstoreKeepAliveInterval : 300000000000

KVstoreLeaseTTL : 900000000000

KVstoreMaxConsecutiveQuorumErrors : 2

KVstorePeriodicSync : 300000000000

KeepConfig : false

KernelHz : 1000

KubeProxyReplacement : strict

KubeProxyReplacementHealthzBindAddr: 0.0.0.0:10256

LBAffinityMapEntries : 0

LBBackendMapEntries : 262144

LBDevInheritIPAddr :

LBMaglevMapEntries : 0

LBMapEntries : 65536

LBRevNatEntries : 0

LBServiceMapEntries : 262144

LBSourceRangeMapEntries : 0

LabelPrefixFile :

Labels : []

LibDir : /var/lib/cilium

LoadBalancerDSRDispatch : opt

LoadBalancerDSRL4Xlate : frontend

LoadBalancerPreserveWorldID : true

LoadBalancerRSSv4

IP :

Mask : <nil>

LoadBalancerRSSv4CIDR :

LoadBalancerRSSv6

IP :

Mask : <nil>

LoadBalancerRSSv6CIDR :

LocalRouterIPv4 : 169.254.4.6

LocalRouterIPv6 : fe80::8893:b6ff:fe2c:7a0d

LogDriver : []

LogOpt

format : json

LogSystemLoadConfig : false

Logstash : false

LoopbackIPv4 : 169.254.42.1

MTU : 0

MaglevHashSeed : JLfvgnHc2kaSUFaI

MaglevTableSize : 16381

MaxControllerInterval : 0

MetricsConfig

APIInteractionsEnabled : Enabled

APILimiterAdjustmentFactor: Enabled

APILimiterProcessedRequests: Enabled

APILimiterProcessingDuration: Enabled

APILimiterRateLimit : Enabled

APILimiterRequestsInFlight: Enabled

APILimiterWaitDuration : Enabled

APILimiterWaitHistoryDuration: Disabled

ArpingRequestsTotalEnabled: Enabled

BPFMapOps : Enabled

BPFMapPressure : Disabled

BPFSyscallDurationEnabled : Disabled

ConntrackDumpResetsEnabled: Enabled

ConntrackGCDurationEnabled: Enabled

ConntrackGCKeyFallbacksEnabled: Enabled

ConntrackGCRunsEnabled : Enabled

ConntrackGCSizeEnabled : Enabled

ControllerRunsDurationEnabled: Enabled

ControllerRunsEnabled : Enabled

DropBytesEnabled : Enabled

DropCountEnabled : Enabled

EndpointPropagationDelayEnabled: Enabled

EndpointRegenerationCountEnabled: Enabled

EndpointRegenerationTimeStatsEnabled: Enabled

EndpointStateCountEnabled : Enabled

ErrorsWarningsEnabled : Enabled

EventLagK8sEnabled : Enabled

EventTSAPIEnabled : Disabled

EventTSContainerdEnabled : Disabled

EventTSEnabled : Enabled

FQDNActiveIPs : Enabled

FQDNActiveNames : Enabled

FQDNActiveZombiesConnections: Enabled

FQDNGarbageCollectorCleanedTotalEnabled: Enabled

FQDNSemaphoreRejectedTotal: Enabled

ForwardBytesEnabled : Enabled

IPCacheErrorsTotalEnabled : Enabled

IPCacheEventsTotalEnabled : Disabled

IdentityCountEnabled : Enabled

IpamEventEnabled : Enabled

KVStoreEventsQueueDurationEnabled: Enabled

KVStoreOperationsDurationEnabled: Enabled

KVStoreQuorumErrorsEnabled: Enabled

KubernetesAPICallsEnabled : Enabled

KubernetesAPIInteractionsEnabled: Enabled

KubernetesCNPStatusCompletionEnabled: Enabled

KubernetesEventProcessedEnabled: Enabled

KubernetesEventReceivedEnabled: Enabled

KubernetesTerminatingEndpointsEnabled: Enabled

KubernetesTimeBetweenEventsEnabled: Disabled

NoOpCounterVecEnabled : Enabled

NoOpObserverVecEnabled : Enabled

NodeConnectivityLatencyEnabled: Enabled

NodeConnectivityStatusEnabled: Enabled

PolicyCountEnabled : Enabled

PolicyEndpointStatusEnabled: Enabled

PolicyImplementationDelayEnabled: Enabled

PolicyImportErrorsEnabled : Enabled

PolicyRegenerationCountEnabled: Enabled

PolicyRegenerationTimeStatsEnabled: Enabled

PolicyRevisionEnabled : Disabled

ProxyDatapathUpdateTimeoutEnabled: Enabled

ProxyDeniedEnabled : Enabled

ProxyForwardedEnabled : Enabled

ProxyParseErrorsEnabled : Enabled

ProxyPolicyL7Enabled : Enabled

ProxyReceivedEnabled : Enabled

ProxyRedirectsEnabled : Enabled

ServicesCountEnabled : Enabled

SignalsHandledEnabled : Enabled

SubprocessStartEnabled : Enabled

TriggerPolicyUpdateCallDuration: Enabled

TriggerPolicyUpdateFolds : Enabled

TriggerPolicyUpdateTotal : Enabled

VersionMetric : Enabled

WireguardAgentTimeStatsEnabled: Enabled

WireguardPeersTotalEnabled: Enabled

WireguardTransferBytesTotalEnabled: Enabled

Monitor

cpus : 2

npages : 64

pagesize : 4096

MonitorAggregation : medium

MonitorAggregationFlags : 255

MonitorAggregationInterval : 5000000000

MonitorQueueSize : 2048

NATMapEntriesGlobal : 131072

NeighMapEntriesGlobal : 131072

NodePortAcceleration : disabled

NodePortAlg : random

NodePortBindProtection : true

NodePortMax : 32767

NodePortMin : 30000

NodePortMode : snat

NodePortNat46X64 : false

PProf : false

PProfAddress : localhost

PProfPort : 6060

PolicyAuditMode : false

PolicyMapEntries : 16384

PolicyQueueSize : 100

PolicyTriggerInterval : 1000000000

PopulateGCENICInfo : false

PreAllocateMaps : false

PrependIptablesChains : true

ProcFs : /proc

PrometheusServeAddr : :9990

ProxyConnectTimeout : 1

ProxyGID : 1337

ProxyMaxConnectionDuration : 0

ProxyMaxRequestsPerConnection : 0

ProxyPrometheusPort : 0

ReadCNIConfiguration :

ResetQueueMapping : true

RestoreState : true

RouteMetric : 0

RunDir : /var/run/cilium

RunMonitorAgent : true

SelectiveRegeneration : true

SidecarIstioProxyImage : cilium/istio_proxy

SizeofCTElement : 94

SizeofNATElement : 94

SizeofNeighElement : 24

SizeofSockRevElement : 52

SockRevNatEntries : 65536

SocketPath : /var/run/cilium/cilium.sock

SockopsEnable : false

StateDir : /var/run/cilium/state

TCFilterPriority : 1

THCPort : 0

THCSourceRanges : []

ToFQDNsEnableDNSCompression : true

ToFQDNsIdleConnectionGracePeriod : 0

ToFQDNsMaxDeferredConnectionDeletes: 10000

ToFQDNsMaxIPsPerHost : 50

ToFQDNsMinTTL : 3600

ToFQDNsPreCache :

ToFQDNsProxyPort : 0

TracePayloadlen : 128

Tunnel : disabled

TunnelPort : 8472

UseSingleClusterRoute : false

VLANBPFBypass : []

Version : false

VtepCIDRs : <nil>

VtepCidrMask :

VtepEndpoints : <nil>

VtepMACs : <nil>

WriteCNIConfigurationWhenReady :

XDPMode : disabled

k8s-configuration : /var/lib/kubelet/kubeconfig

k8s-endpoint : https://10.128.0.28:443

##### Read-write configurations #####

ConntrackAccounting : Enabled

ConntrackLocal : Disabled

Debug : Enabled

DebugLB : Enabled

DropNotification : Enabled

MonitorAggregationLevel : Medium

PolicyAuditMode : Disabled

PolicyTracing : Disabled

PolicyVerdictNotification : Enabled

TraceNotification : Enabled

MonitorNumPages : 64

PolicyEnforcement : default
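The configuration dump is long, and many of the timeout values are large integers. They appear to be Go durations printed in nanoseconds, so `CTMapEntriesTimeoutTCP : 21600000000000` would correspond to 21600 seconds, or 6 hours. The following sketch looks up a single key from the dump and converts such a value to seconds. The function names are hypothetical, and the nanosecond interpretation is an assumption that you should confirm against the Cilium documentation for your version.

```shell
# Read `cilium config --all` output on stdin and print the value(s) of one key.
# A key such as "Debug" appears in both the read-only and the read-write
# section, so more than one line may be printed.
config_value() {
  awk -v key="$1" '{
    pos = index($0, ":")
    if (pos == 0) next                      # skip headers and log lines
    name = substr($0, 1, pos - 1)
    gsub(/[ \t]/, "", name)                 # strip padding around the key
    if (name == key) {
      value = substr($0, pos + 1)
      gsub(/^[ \t]+|[ \t]+$/, "", value)    # trim the value
      print value
    }
  }'
}

# Convert a nanosecond count to whole seconds (assumes the value is a
# duration expressed in nanoseconds).
ns_to_seconds() {
  echo $(( $1 / 1000000000 ))
}

# Usage:
# cilium config --all | config_value CNIChainingMode
# ns_to_seconds "$(cilium config --all | config_value CTMapEntriesTimeoutTCP)"
```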

6: Check default endpoints

root@gke-test-cluster-1-default-pool-d0991fd4-c36l:/home/cilium# cilium endpoint list

level=info msg="Initializing Google metrics" subsys=metrics

ENDPOINT POLICY (ingress) POLICY (egress) IDENTITY LABELS (source:key[=value]) IPv6 IPv4 STATUS

ENFORCEMENT ENFORCEMENT

239 Disabled Disabled 47494 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system fe80::6009:f8ff:fea0:6524 10.88.0.2 ready

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=node-local-dns

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=node-local-dns

414 Disabled Disabled 1 k8s:addon.gke.io/node-local-dns-ds-ready=true ready

k8s:cloud.google.com/gke-boot-disk=pd-balanced

k8s:cloud.google.com/gke-container-runtime=containerd

k8s:cloud.google.com/gke-cpu-scaling-level=2

k8s:cloud.google.com/gke-logging-variant=DEFAULT

k8s:cloud.google.com/gke-max-pods-per-node=110

k8s:cloud.google.com/gke-netd-ready=true

k8s:cloud.google.com/gke-nodepool=default-pool

k8s:cloud.google.com/gke-os-distribution=cos

k8s:cloud.google.com/gke-provisioning=standard

k8s:cloud.google.com/gke-stack-type=IPV4

k8s:cloud.google.com/machine-family=e2

k8s:cloud.google.com/private-node=false

k8s:node.kubernetes.io/instance-type=e2-medium

k8s:topology.gke.io/zone=us-central1-c

k8s:topology.kubernetes.io/region=us-central1

k8s:topology.kubernetes.io/zone=us-central1-c

reserved:host

540 Disabled Disabled 11550 k8s:app.kubernetes.io/name=collector fe80::c01:1bff:fe56:addf 10.88.0.7 ready

k8s:app.kubernetes.io/version=0.7.2

k8s:app=managed-prometheus-collector

k8s:io.cilium.k8s.namespace.labels.addonmanager.kubernetes.io/mode=Reconcile

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=gmp-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=collector

k8s:io.kubernetes.pod.namespace=gmp-system

1097 Disabled Disabled 13693 k8s:app.kubernetes.io/name=rule-evaluator fe80::8c6d:5aff:fe6f:d615 10.88.0.4 ready

k8s:app.kubernetes.io/version=0.7.2

k8s:app=managed-prometheus-rule-evaluator

k8s:io.cilium.k8s.namespace.labels.addonmanager.kubernetes.io/mode=Reconcile

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=gmp-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=collector

k8s:io.kubernetes.pod.namespace=gmp-system

2366 Disabled Disabled 29491 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system fe80::6c64:fdff:fe43:2a1d 10.88.0.5 ready

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=metrics-server

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=metrics-server

k8s:version=v0.5.2

3419 Disabled Disabled 3122 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system fe80::a87f:bbff:fe0a:f3e3 10.88.0.3 ready

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=konnectivity-agent

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=konnectivity-agent
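When you start from a pod IP, you usually want the endpoint ID and the security identity that Cilium assigned to it. The sketch below scans the `cilium endpoint list` text for a row that contains the given IPv4 address and prints both numbers. It assumes the column layout shown above (endpoint ID first, identity fourth), and the function name is hypothetical.

```shell
# Read `cilium endpoint list` output on stdin and print
# "<endpoint-id> <identity>" for the endpoint that owns the given IP.
endpoint_for_ip() {
  awk -v ip="$1" '$1 ~ /^[0-9]+$/ {          # only rows that start an endpoint
    for (i = 2; i <= NF; i++) {
      if ($i == ip) { print $1, $4; exit }
    }
  }'
}

# Usage:
# cilium endpoint list | endpoint_for_ip 10.88.0.2
```

For the listing above, looking up 10.88.0.2 would give endpoint 239 with identity 47494, which is the node-local-dns pod.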

7: Query default identities created by cilium for the cluster

root@gke-test-cluster-1-default-pool-d0991fd4-c36l:/home/cilium# cilium identity list

level=info msg="Initializing Google metrics" subsys=metrics

ID LABELS

1 reserved:host

2 reserved:world

3 reserved:unmanaged

4 reserved:health

5 reserved:init

6 reserved:remote-node

7 reserved:kube-apiserver

reserved:remote-node

8 reserved:ingress

1062 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=glbc

k8s:name=glbc

3122 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=konnectivity-agent

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=konnectivity-agent

3572 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=kube-dns

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=kube-dns

11550 k8s:app.kubernetes.io/name=collector

k8s:app.kubernetes.io/version=0.7.2

k8s:app=managed-prometheus-collector

k8s:io.cilium.k8s.namespace.labels.addonmanager.kubernetes.io/mode=Reconcile

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=gmp-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=collector

k8s:io.kubernetes.pod.namespace=gmp-system

13693 k8s:app.kubernetes.io/name=rule-evaluator

k8s:app.kubernetes.io/version=0.7.2

k8s:app=managed-prometheus-rule-evaluator

k8s:io.cilium.k8s.namespace.labels.addonmanager.kubernetes.io/mode=Reconcile

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=gmp-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=collector

k8s:io.kubernetes.pod.namespace=gmp-system

16855 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=konnectivity-agent-cpha

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=konnectivity-agent-autoscaler

26679 k8s:app.kubernetes.io/component=operator

k8s:app.kubernetes.io/name=gmp-operator

k8s:app.kubernetes.io/part-of=gmp

k8s:app.kubernetes.io/version=0.7.2

k8s:app=managed-prometheus-operator

k8s:io.cilium.k8s.namespace.labels.addonmanager.kubernetes.io/mode=Reconcile

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=gmp-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=operator

k8s:io.kubernetes.pod.namespace=gmp-system

27686 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=antrea-cpha

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=antrea-controller-autoscaler

29491 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=metrics-server

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=metrics-server

k8s:version=v0.5.2

47494 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=node-local-dns

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=node-local-dns

52269 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=kube-dns-autoscaler

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=kube-dns-autoscaler

62176 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=event-exporter-sa

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=event-exporter

k8s:version=v0.4.0

62991 k8s:app.kubernetes.io/name=alertmanager

k8s:app.kubernetes.io/version=0.7.2

k8s:app=managed-prometheus-alertmanager

k8s:io.cilium.k8s.namespace.labels.addonmanager.kubernetes.io/mode=Reconcile

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=gmp-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=gmp-system

k8s:statefulset.kubernetes.io/pod-name=alertmanager-0

16777217 cidr:10.128.0.28/32

reserved:kube-apiserver

reserved:world
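An identity number on its own is not very telling, so it helps to translate it back into its labels. The sketch below extracts the label block for one identity from the `cilium identity list` text. The function name is hypothetical. The cilium CLI also offers `cilium identity get <id>` for a single identity, which may be more convenient when you already have a shell in the pod.

```shell
# Read `cilium identity list` output on stdin and print the labels that
# belong to one numeric identity, one label per line.
identity_labels() {
  awk -v id="$1" '
    $1 ~ /^[0-9]+$/ { current = $1; $1 = "" }   # a new identity block starts
    current == id && NF > 0 {
      sub(/^[ \t]+/, "")                        # trim the leading padding
      print
    }
  '
}

# Usage:
# cilium identity list | identity_labels 47494
```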

8: Collect default lxc map created by cilium on the cluster

root@gke-test-cluster-1-default-pool-d0991fd4-c36l:/home/cilium# cilium map get cilium_lxc

level=info msg="Initializing Google metrics" subsys=metrics

Key Value State Error

10.88.0.5:0 id=2366 flags=0x0000 ifindex=9 mac=6E:64:FD:43:2A:1D nodemac=7E:59:02:D3:E6:CF sync

10.128.0.29:0 (localhost) sync

10.88.0.1:0 (localhost) sync

[fe80::6009:f8ff:fea0:6524]:0 id=239 flags=0x0000 ifindex=6 mac=62:09:F8:A0:65:24 nodemac=8A:A9:32:BA:03:60 sync

10.88.0.2:0 id=239 flags=0x0000 ifindex=6 mac=62:09:F8:A0:65:24 nodemac=8A:A9:32:BA:03:60 sync

10.88.0.3:0 id=3419 flags=0x0000 ifindex=7 mac=AA:7F:BB:0A:F3:E3 nodemac=62:BF:6E:26:11:BA sync

10.88.0.4:0 id=1097 flags=0x0000 ifindex=8 mac=8E:6D:5A:6F:D6:15 nodemac=DA:1C:8E:29:69:77 sync

[fe80::a87f:bbff:fe0a:f3e3]:0 id=3419 flags=0x0000 ifindex=7 mac=AA:7F:BB:0A:F3:E3 nodemac=62:BF:6E:26:11:BA sync

[fe80::8c6d:5aff:fe6f:d615]:0 id=1097 flags=0x0000 ifindex=8 mac=8E:6D:5A:6F:D6:15 nodemac=DA:1C:8E:29:69:77 sync

[fe80::6c64:fdff:fe43:2a1d]:0 id=2366 flags=0x0000 ifindex=9 mac=6E:64:FD:43:2A:1D nodemac=7E:59:02:D3:E6:CF sync

[fe80::c01:1bff:fe56:addf]:0 id=540 flags=0x0000 ifindex=11 mac=0E:01:1B:56:AD:DF nodemac=46:26:D9:B4:9F:AC sync

10.88.0.7:0 id=540 flags=0x0000 ifindex=11 mac=0E:01:1B:56:AD:DF nodemac=46:26:D9:B4:9F:AC sync

Note: the node-local DNS IP 10.88.0.2 is mapped to endpoint 239.
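The same lookup can be scripted against the map dump. This is a minimal sketch for IPv4 keys only, assuming the `<ip>:0` key format and the `id=` field shown above. The function name is hypothetical.

```shell
# Read `cilium map get cilium_lxc` output on stdin and print the endpoint ID
# that the datapath has recorded for the given IPv4 address.
lxc_endpoint_for_ip() {
  awk -v key="$1:0" '$1 == key {
    for (i = 2; i <= NF; i++) {
      if ($i ~ /^id=/) { sub(/^id=/, "", $i); print $i; exit }
    }
  }'
}

# Usage:
# cilium map get cilium_lxc | lxc_endpoint_for_ip 10.88.0.2
```

Entries marked `(localhost)` carry no `id=` field, so the function prints nothing for the node's own addresses.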

9: Collect default ipcache map created by cilium on the cluster

root@gke-test-cluster-1-default-pool-d0991fd4-c36l:/home/cilium# cilium map get cilium_ipcache

level=info msg="Initializing Google metrics" subsys=metrics

Key Value State Error

fe80::c0f6:5fff:fe17:6323/128 identity=11550 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

fe80::3c76:2aff:fec4:4dc6/128 identity=1062 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

fe80::583d:c5ff:fed3:3864/128 identity=26679 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

fe80::8c6d:5aff:fe6f:d615/128 identity=13693 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

fe80::ac7c:71ff:fe0f:e8ed/128 identity=47494 encryptkey=0 tunnelendpoint=10.128.0.31 nodeid=2752 sync

10.88.2.2/32 identity=47494 encryptkey=0 tunnelendpoint=10.128.0.31 nodeid=2752 sync

10.88.2.3/32 identity=3122 encryptkey=0 tunnelendpoint=10.128.0.31 nodeid=2752 sync

10.88.0.7/32 identity=11550 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

10.128.0.31/32 identity=6 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

169.254.4.6/32 identity=1 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

10.128.0.29/32 identity=1 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

10.128.0.28/32 identity=16777217 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

10.88.1.6/32 identity=3122 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

10.88.1.11/32 identity=47494 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

fe80::3c17:e1ff:fedd:537a/128 identity=62176 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

10.88.0.1/32 identity=1 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

34.132.77.122/32 identity=6 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

10.88.0.2/32 identity=47494 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

fe80::90b0:19ff:fe0f:9a95/128 identity=27686 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

fe80::c022:5bff:fede:7599/128 identity=3572 encryptkey=0 tunnelendpoint=10.128.0.31 nodeid=2752 sync

10.88.0.4/32 identity=13693 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

fe80::205d:e6ff:fe21:75e0/128 identity=62991 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

10.88.1.4/32 identity=1062 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

10.88.1.2/32 identity=16855 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

fe80::2c1b:85ff:fe5d:d117/128 identity=47494 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

10.88.1.9/32 identity=3572 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

fe80::6009:f8ff:fea0:6524/128 identity=47494 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

34.172.232.136/32 identity=6 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

10.88.1.10/32 identity=26679 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

10.88.1.3/32 identity=27686 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

10.88.2.4/32 identity=3572 encryptkey=0 tunnelendpoint=10.128.0.31 nodeid=2752 sync

fe80::6c64:fdff:fe43:2a1d/128 identity=29491 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

fe80::940d:dcff:fe20:4de5/128 identity=3122 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

0.0.0.0/0 identity=2 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

10.88.0.3/32 identity=3122 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

10.88.1.13/32 identity=62991 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

10.88.1.8/32 identity=62176 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

10.88.1.7/32 identity=52269 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

fe80::100f:a6ff:fe1d:efe6/128 identity=52269 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

10.88.0.5/32 identity=29491 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

10.88.2.6/32 identity=11550 encryptkey=0 tunnelendpoint=10.128.0.31 nodeid=2752 sync

fe80::30e3:a7ff:fe48:bbe8/128 identity=16855 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

34.69.188.144/32 identity=1 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

fe80::6010:f5ff:fe28:493b/128 identity=3572 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

10.88.1.15/32 identity=11550 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

fe80::f8f0:b1ff:feda:2203/128 identity=3122 encryptkey=0 tunnelendpoint=10.128.0.31 nodeid=2752 sync

fe80::a87f:bbff:fe0a:f3e3/128 identity=3122 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

fe80::183b:48ff:fea1:8107/128 identity=11550 encryptkey=0 tunnelendpoint=10.128.0.31 nodeid=2752 sync

fe80::c01:1bff:fe56:addf/128 identity=11550 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

10.128.0.30/32 identity=6 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

Note: the node-local DNS IP 10.88.0.2 is mapped to identity 47494.
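The ipcache can also be read the other way round, from an identity to every address that carries it. In the dump above, identity 47494 shows up for 10.88.0.2, 10.88.2.2 and 10.88.1.11, one per node, which is what you would expect for a DaemonSet such as node-local-dns. The sketch below prints each prefix together with its `tunnelendpoint` value. Entries with `tunnelendpoint=0.0.0.0` appear to be local to the node being inspected, but treat that reading as an assumption. The function name is hypothetical.

```shell
# Read `cilium map get cilium_ipcache` output on stdin and print
# "<prefix> <tunnelendpoint>" for every entry with the given identity.
ips_for_identity() {
  awk -v want="identity=$1" '$2 == want {
    node = $4
    sub(/^tunnelendpoint=/, "", node)
    print $1, node
  }'
}

# Usage:
# cilium map get cilium_ipcache | ips_for_identity 47494
```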

10: Install JupyterHub on the cluster as described in my previous post

11: Check the endpoints, identities and maps again so that you can identify the impact of the JupyterHub release. To troubleshoot the reported issue, focus on the hub component.

root@gke-test-cluster-1-default-pool-d0991fd4-c36l:/home/cilium# cilium endpoint list

level=info msg="Initializing Google metrics" subsys=metrics

ENDPOINT POLICY (ingress) POLICY (egress) IDENTITY LABELS (source:key[=value]) IPv6 IPv4 STATUS

ENFORCEMENT ENFORCEMENT

33 Disabled Disabled 15194 k8s:app=jupyterhub fe80::bc40:72ff:fe8c:7cab 10.88.0.10 ready

k8s:component=continuous-image-puller

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=testjupyterhubdev

k8s:io.cilium.k8s.namespace.labels.name=testjupyterhubdev

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=testjupyterhubdev

k8s:release=testjupyterhub

166 Enabled Enabled 6621 k8s:app=jupyterhub fe80::d8d7:76ff:fef6:4773 10.88.0.11 ready

k8s:component=proxy

k8s:hub.jupyter.org/network-access-hub=true

k8s:hub.jupyter.org/network-access-singleuser=true

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=testjupyterhubdev

k8s:io.cilium.k8s.namespace.labels.name=testjupyterhubdev

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=testjupyterhubdev

k8s:release=testjupyterhub

239 Disabled Disabled 47494 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system fe80::6009:f8ff:fea0:6524 10.88.0.2 ready

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=node-local-dns

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=node-local-dns

414 Disabled Disabled 1 k8s:addon.gke.io/node-local-dns-ds-ready=true ready

k8s:cloud.google.com/gke-boot-disk=pd-balanced

k8s:cloud.google.com/gke-container-runtime=containerd

k8s:cloud.google.com/gke-cpu-scaling-level=2

k8s:cloud.google.com/gke-logging-variant=DEFAULT

k8s:cloud.google.com/gke-max-pods-per-node=110

k8s:cloud.google.com/gke-netd-ready=true

k8s:cloud.google.com/gke-nodepool=default-pool

k8s:cloud.google.com/gke-os-distribution=cos

k8s:cloud.google.com/gke-provisioning=standard

k8s:cloud.google.com/gke-stack-type=IPV4

k8s:cloud.google.com/machine-family=e2

k8s:cloud.google.com/private-node=false

k8s:node.kubernetes.io/instance-type=e2-medium

k8s:topology.gke.io/zone=us-central1-c

k8s:topology.kubernetes.io/region=us-central1

k8s:topology.kubernetes.io/zone=us-central1-c

reserved:host

540 Disabled Disabled 11550 k8s:app.kubernetes.io/name=collector fe80::c01:1bff:fe56:addf 10.88.0.7 ready

k8s:app.kubernetes.io/version=0.7.2

k8s:app=managed-prometheus-collector

k8s:io.cilium.k8s.namespace.labels.addonmanager.kubernetes.io/mode=Reconcile

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=gmp-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=collector

k8s:io.kubernetes.pod.namespace=gmp-system

638 Disabled Disabled 9520 k8s:app=jupyterhub fe80::e087:14ff:fecf:6e59 10.88.0.12 ready

k8s:component=user-scheduler

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=testjupyterhubdev

k8s:io.cilium.k8s.namespace.labels.name=testjupyterhubdev

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=user-scheduler

k8s:io.kubernetes.pod.namespace=testjupyterhubdev

k8s:release=testjupyterhub

1097 Disabled Disabled 13693 k8s:app.kubernetes.io/name=rule-evaluator fe80::8c6d:5aff:fe6f:d615 10.88.0.4 ready

k8s:app.kubernetes.io/version=0.7.2

k8s:app=managed-prometheus-rule-evaluator

k8s:io.cilium.k8s.namespace.labels.addonmanager.kubernetes.io/mode=Reconcile

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=gmp-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=collector

k8s:io.kubernetes.pod.namespace=gmp-system

2366 Disabled Disabled 29491 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system fe80::6c64:fdff:fe43:2a1d 10.88.0.5 ready

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=metrics-server

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=metrics-server

k8s:version=v0.5.2

3419 Disabled Disabled 3122 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system fe80::a87f:bbff:fe0a:f3e3 10.88.0.3 ready

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=konnectivity-agent

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=konnectivity-agent

3535 Enabled Enabled 34776 k8s:app=jupyterhub fe80::14a4:a6ff:feee:dc72 10.88.0.13 ready

k8s:component=hub

k8s:hub.jupyter.org/network-access-proxy-api=true

k8s:hub.jupyter.org/network-access-proxy-http=true

k8s:hub.jupyter.org/network-access-singleuser=true

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=testjupyterhubdev

k8s:io.cilium.k8s.namespace.labels.name=testjupyterhubdev

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=hub

k8s:io.kubernetes.pod.namespace=testjupyterhubdev

k8s:release=testjupyterhub
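In the listing above, the hub pod (label k8s:component=hub) is endpoint 3535 with identity 34776. As a rough sketch, the same pair can be pulled out of the text output with a shell function. The name `endpoint_by_label` is hypothetical, and the parsing assumes the column order shown above (endpoint ID first, identity fourth).

```shell
# Print "ENDPOINT_ID IDENTITY" for every endpoint carrying the given label.
# Reads `cilium endpoint list` text output from stdin.
# endpoint_by_label is a hypothetical helper name, not a cilium subcommand.
endpoint_by_label() {
  awk -v label="$1" '
    # An endpoint row starts with the numeric endpoint ID; the identity
    # is the fourth column, after the two policy enforcement columns.
    $1 ~ /^[0-9]+$/ { id = $1; ident = $4 }
    # Labels appear on the endpoint row and on the lines that follow it.
    {
      for (i = 1; i <= NF; i++)
        if ($i == label && id != "") print id, ident
    }
  '
}

# Usage from the same shell as the commands above (assumption: the
# cilium CLI is available there):
#   cilium endpoint list | endpoint_by_label k8s:component=hub
```

The endpoint ID is local to the node, while the identity is what shows up in the ipcache and policy maps, so it helps to note both.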

root@gke-test-cluster-1-default-pool-d0991fd4-c36l:/home/cilium# cilium identity list

level=info msg="Initializing Google metrics" subsys=metrics

ID LABELS

1 reserved:host

2 reserved:world

3 reserved:unmanaged

4 reserved:health

5 reserved:init

6 reserved:remote-node

7 reserved:kube-apiserver

reserved:remote-node

8 reserved:ingress

1062 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=glbc

k8s:name=glbc

3122 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=konnectivity-agent

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=konnectivity-agent

3572 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=kube-dns

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=kube-dns

6621 k8s:app=jupyterhub

k8s:component=proxy

k8s:hub.jupyter.org/network-access-hub=true

k8s:hub.jupyter.org/network-access-singleuser=true

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=testjupyterhubdev

k8s:io.cilium.k8s.namespace.labels.name=testjupyterhubdev

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=testjupyterhubdev

k8s:release=testjupyterhub

9520 k8s:app=jupyterhub

k8s:component=user-scheduler

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=testjupyterhubdev

k8s:io.cilium.k8s.namespace.labels.name=testjupyterhubdev

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=user-scheduler

k8s:io.kubernetes.pod.namespace=testjupyterhubdev

k8s:release=testjupyterhub

11550 k8s:app.kubernetes.io/name=collector

k8s:app.kubernetes.io/version=0.7.2

k8s:app=managed-prometheus-collector

k8s:io.cilium.k8s.namespace.labels.addonmanager.kubernetes.io/mode=Reconcile

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=gmp-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=collector

k8s:io.kubernetes.pod.namespace=gmp-system

11741 k8s:app=jupyterhub

k8s:batch.kubernetes.io/controller-uid=f1a1cd5d-7dd9-471b-877f-9a8313383345

k8s:batch.kubernetes.io/job-name=hook-image-awaiter

k8s:component=image-puller

k8s:controller-uid=f1a1cd5d-7dd9-471b-877f-9a8313383345

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=testjupyterhubdev

k8s:io.cilium.k8s.namespace.labels.name=testjupyterhubdev

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=hook-image-awaiter

k8s:io.kubernetes.pod.namespace=testjupyterhubdev

k8s:job-name=hook-image-awaiter

k8s:release=testjupyterhub

13693 k8s:app.kubernetes.io/name=rule-evaluator

k8s:app.kubernetes.io/version=0.7.2

k8s:app=managed-prometheus-rule-evaluator

k8s:io.cilium.k8s.namespace.labels.addonmanager.kubernetes.io/mode=Reconcile

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=gmp-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=collector

k8s:io.kubernetes.pod.namespace=gmp-system

15194 k8s:app=jupyterhub

k8s:component=continuous-image-puller

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=testjupyterhubdev

k8s:io.cilium.k8s.namespace.labels.name=testjupyterhubdev

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=testjupyterhubdev

k8s:release=testjupyterhub

16855 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=konnectivity-agent-cpha

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=konnectivity-agent-autoscaler

23629 k8s:app=jupyterhub

k8s:component=hook-image-puller

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=testjupyterhubdev

k8s:io.cilium.k8s.namespace.labels.name=testjupyterhubdev

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=testjupyterhubdev

k8s:release=testjupyterhub

26679 k8s:app.kubernetes.io/component=operator

k8s:app.kubernetes.io/name=gmp-operator

k8s:app.kubernetes.io/part-of=gmp

k8s:app.kubernetes.io/version=0.7.2

k8s:app=managed-prometheus-operator

k8s:io.cilium.k8s.namespace.labels.addonmanager.kubernetes.io/mode=Reconcile

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=gmp-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=operator

k8s:io.kubernetes.pod.namespace=gmp-system

27686 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=antrea-cpha

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=antrea-controller-autoscaler

29491 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=metrics-server

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=metrics-server

k8s:version=v0.5.2

34776 k8s:app=jupyterhub

k8s:component=hub

k8s:hub.jupyter.org/network-access-proxy-api=true

k8s:hub.jupyter.org/network-access-proxy-http=true

k8s:hub.jupyter.org/network-access-singleuser=true

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=testjupyterhubdev

k8s:io.cilium.k8s.namespace.labels.name=testjupyterhubdev

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=hub

k8s:io.kubernetes.pod.namespace=testjupyterhubdev

k8s:release=testjupyterhub

47494 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=node-local-dns

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=node-local-dns

52269 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=kube-dns-autoscaler

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=kube-dns-autoscaler

62176 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=event-exporter-sa

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=event-exporter

k8s:version=v0.4.0

62991 k8s:app.kubernetes.io/name=alertmanager

k8s:app.kubernetes.io/version=0.7.2

k8s:app=managed-prometheus-alertmanager

k8s:io.cilium.k8s.namespace.labels.addonmanager.kubernetes.io/mode=Reconcile

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=gmp-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=gmp-system

k8s:statefulset.kubernetes.io/pod-name=alertmanager-0

16777217 cidr:10.128.0.28/32

reserved:kube-apiserver

reserved:world

16777218 cidr:10.0.0.0/8

reserved:world

16777219 cidr:172.16.0.0/12

reserved:world

16777220 cidr:192.168.0.0/16

reserved:world

16777221 cidr:64.0.0.0/2

reserved:world

16777222 cidr:32.0.0.0/3

reserved:world

16777223 cidr:16.0.0.0/4

reserved:world

16777224 cidr:0.0.0.0/5

reserved:world

16777225 cidr:12.0.0.0/6

reserved:world

16777226 cidr:8.0.0.0/7

reserved:world

16777227 cidr:11.0.0.0/8

reserved:world

16777228 cidr:128.0.0.0/3

reserved:world

16777229 cidr:176.0.0.0/4

reserved:world

16777230 cidr:160.0.0.0/5

reserved:world

16777231 cidr:174.0.0.0/7

reserved:world

16777232 cidr:173.0.0.0/8

reserved:world

16777233 cidr:172.128.0.0/9

reserved:world

16777234 cidr:172.64.0.0/10

reserved:world

16777235 cidr:172.32.0.0/11

reserved:world

16777236 cidr:172.0.0.0/12

reserved:world

16777237 cidr:224.0.0.0/3

reserved:world

16777238 cidr:208.0.0.0/4

reserved:world

16777239 cidr:200.0.0.0/5

reserved:world

16777240 cidr:196.0.0.0/6

reserved:world

16777241 cidr:194.0.0.0/7

reserved:world

16777242 cidr:193.0.0.0/8

reserved:world

16777243 cidr:192.0.0.0/9

reserved:world

16777244 cidr:192.192.0.0/10

reserved:world

16777245 cidr:192.128.0.0/11

reserved:world

16777246 cidr:192.176.0.0/12

reserved:world

16777247 cidr:192.160.0.0/13

reserved:world

16777248 cidr:192.172.0.0/14

reserved:world

16777249 cidr:192.170.0.0/15

reserved:world

16777250 cidr:192.169.0.0/16

reserved:world

16777251 cidr:170.0.0.0/7

reserved:world

16777252 cidr:168.0.0.0/8

reserved:world

16777253 cidr:169.0.0.0/9

reserved:world

16777254 cidr:169.128.0.0/10

reserved:world

16777255 cidr:169.192.0.0/11

reserved:world

16777256 cidr:169.224.0.0/12

reserved:world

16777257 cidr:169.240.0.0/13

reserved:world

16777258 cidr:169.248.0.0/14

reserved:world

16777259 cidr:169.252.0.0/15

reserved:world

16777260 cidr:169.255.0.0/16

reserved:world

16777261 cidr:169.254.0.0/17

reserved:world

16777262 cidr:169.254.192.0/18

reserved:world

16777263 cidr:169.254.128.0/19

reserved:world

16777264 cidr:169.254.176.0/20

reserved:world

16777265 cidr:169.254.160.0/21

reserved:world

16777266 cidr:169.254.172.0/22

reserved:world

16777267 cidr:169.254.170.0/23

reserved:world

16777268 cidr:169.254.168.0/24

reserved:world

16777269 cidr:169.254.169.0/25

reserved:world

16777270 cidr:169.254.169.128/26

reserved:world

16777271 cidr:169.254.169.192/27

reserved:world

16777272 cidr:169.254.169.224/28

reserved:world

16777273 cidr:169.254.169.240/29

reserved:world

16777274 cidr:169.254.169.248/30

reserved:world

16777275 cidr:169.254.169.252/31

reserved:world

16777276 cidr:169.254.169.255/32

reserved:world

16777277 cidr:169.254.169.254/32

reserved:world

root@gke-test-cluster-1-default-pool-d0991fd4-c36l:/home/cilium# cilium map get cilium_lxc

level=info msg="Initializing Google metrics" subsys=metrics

Key Value State Error

[fe80::d8d7:76ff:fef6:4773]:0 id=166 flags=0x0000 ifindex=15 mac=DA:D7:76:F6:47:73 nodemac=6A:4C:3C:00:78:27 sync

10.128.0.29:0 (localhost) sync

[fe80::6009:f8ff:fea0:6524]:0 id=239 flags=0x0000 ifindex=6 mac=62:09:F8:A0:65:24 nodemac=8A:A9:32:BA:03:60 sync

10.88.0.2:0 id=239 flags=0x0000 ifindex=6 mac=62:09:F8:A0:65:24 nodemac=8A:A9:32:BA:03:60 sync

10.88.0.4:0 id=1097 flags=0x0000 ifindex=8 mac=8E:6D:5A:6F:D6:15 nodemac=DA:1C:8E:29:69:77 sync

[fe80::a87f:bbff:fe0a:f3e3]:0 id=3419 flags=0x0000 ifindex=7 mac=AA:7F:BB:0A:F3:E3 nodemac=62:BF:6E:26:11:BA sync

[fe80::6c64:fdff:fe43:2a1d]:0 id=2366 flags=0x0000 ifindex=9 mac=6E:64:FD:43:2A:1D nodemac=7E:59:02:D3:E6:CF sync

10.88.0.12:0 id=638 flags=0x0000 ifindex=16 mac=E2:87:14:CF:6E:59 nodemac=1A:3E:66:05:72:08 sync

10.88.0.13:0 id=3535 flags=0x0000 ifindex=17 mac=16:A4:A6:EE:DC:72 nodemac=56:84:79:4D:24:23 sync

[fe80::14a4:a6ff:feee:dc72]:0 id=3535 flags=0x0000 ifindex=17 mac=16:A4:A6:EE:DC:72 nodemac=56:84:79:4D:24:23 sync

10.88.0.1:0 (localhost) sync

10.88.0.3:0 id=3419 flags=0x0000 ifindex=7 mac=AA:7F:BB:0A:F3:E3 nodemac=62:BF:6E:26:11:BA sync

10.88.0.5:0 id=2366 flags=0x0000 ifindex=9 mac=6E:64:FD:43:2A:1D nodemac=7E:59:02:D3:E6:CF sync

[fe80::e087:14ff:fecf:6e59]:0 id=638 flags=0x0000 ifindex=16 mac=E2:87:14:CF:6E:59 nodemac=1A:3E:66:05:72:08 sync

10.88.0.10:0 id=33 flags=0x0000 ifindex=14 mac=BE:40:72:8C:7C:AB nodemac=22:02:CB:0E:D0:C0 sync

10.88.0.11:0 id=166 flags=0x0000 ifindex=15 mac=DA:D7:76:F6:47:73 nodemac=6A:4C:3C:00:78:27 sync

[fe80::8c6d:5aff:fe6f:d615]:0 id=1097 flags=0x0000 ifindex=8 mac=8E:6D:5A:6F:D6:15 nodemac=DA:1C:8E:29:69:77 sync

[fe80::c01:1bff:fe56:addf]:0 id=540 flags=0x0000 ifindex=11 mac=0E:01:1B:56:AD:DF nodemac=46:26:D9:B4:9F:AC sync

10.88.0.7:0 id=540 flags=0x0000 ifindex=11 mac=0E:01:1B:56:AD:DF nodemac=46:26:D9:B4:9F:AC sync

[fe80::bc40:72ff:fe8c:7cab]:0 id=33 flags=0x0000 ifindex=14 mac=BE:40:72:8C:7C:AB nodemac=22:02:CB:0E:D0:C0 sync

root@gke-test-cluster-1-default-pool-d0991fd4-c36l:/home/cilium# cilium map get cilium_ipcache

level=info msg="Initializing Google metrics" subsys=metrics

Key Value State Error

10.128.0.31/32 identity=6 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

192.192.0.0/10 identity=16777244 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

192.172.0.0/14 identity=16777248 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

169.254.168.0/24 identity=16777268 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

fe80::bc40:72ff:fe8c:7cab/128 identity=15194 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

fe80::205d:e6ff:fe21:75e0/128 identity=62991 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

169.254.169.252/31 identity=16777275 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

34.132.77.122/32 identity=6 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

10.128.0.28/32 identity=16777217 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

10.88.1.6/32 identity=3122 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

10.88.1.11/32 identity=47494 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

fe80::3c17:e1ff:fedd:537a/128 identity=62176 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

fe80::c022:5bff:fede:7599/128 identity=3572 encryptkey=0 tunnelendpoint=10.128.0.31 nodeid=2752 sync

10.88.0.11/32 identity=6621 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

34.172.232.136/32 identity=6 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

10.88.1.9/32 identity=3572 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

172.128.0.0/9 identity=16777233 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

169.252.0.0/15 identity=16777259 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

12.0.0.0/6 identity=16777225 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

193.0.0.0/8 identity=16777242 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

0.0.0.0/0 identity=2 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

172.32.0.0/11 identity=16777235 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

0.0.0.0/5 identity=16777224 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

fe80::a87f:bbff:fe0a:f3e3/128 identity=3122 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

fe80::183b:48ff:fea1:8107/128 identity=11550 encryptkey=0 tunnelendpoint=10.128.0.31 nodeid=2752 sync

169.254.160.0/21 identity=16777265 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

200.0.0.0/5 identity=16777239 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

169.224.0.0/12 identity=16777256 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

169.0.0.0/9 identity=16777253 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

169.254.172.0/22 identity=16777266 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

64.0.0.0/2 identity=16777221 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

196.0.0.0/6 identity=16777240 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

10.88.0.12/32 identity=9520 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

fe80::e087:14ff:fecf:6e59/128 identity=9520 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

10.128.0.29/32 identity=1 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

fe80::90b0:19ff:fe0f:9a95/128 identity=27686 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

fe80::d8d7:76ff:fef6:4773/128 identity=6621 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

10.88.1.2/32 identity=16855 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

fe80::6009:f8ff:fea0:6524/128 identity=47494 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

169.254.192.0/18 identity=16777262 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

169.192.0.0/11 identity=16777255 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

10.88.0.10/32 identity=15194 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

169.254.169.255/32 identity=16777276 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

169.254.128.0/19 identity=16777263 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

10.88.1.8/32 identity=62176 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

169.248.0.0/14 identity=16777258 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

16.0.0.0/4 identity=16777223 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

8.0.0.0/7 identity=16777226 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

10.88.1.17/32 identity=15194 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

fe80::30e3:a7ff:fe48:bbe8/128 identity=16855 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

10.88.0.5/32 identity=29491 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

192.170.0.0/15 identity=16777249 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

169.254.0.0/17 identity=16777261 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

fe80::14a4:a6ff:feee:dc72/128 identity=34776 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

10.128.0.30/32 identity=6 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

fe80::c01:1bff:fe56:addf/128 identity=11550 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

32.0.0.0/3 identity=16777222 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

169.254.169.224/28 identity=16777272 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

169.254.169.240/29 identity=16777273 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

169.128.0.0/10 identity=16777254 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

fe80::ac7c:71ff:fe0f:e8ed/128 identity=47494 encryptkey=0 tunnelendpoint=10.128.0.31 nodeid=2752 sync

fe80::c0f6:5fff:fe17:6323/128 identity=11550 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

fe80::3c76:2aff:fec4:4dc6/128 identity=1062 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

fe80::583d:c5ff:fed3:3864/128 identity=26679 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

fe80::8c6d:5aff:fe6f:d615/128 identity=13693 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

11.0.0.0/8 identity=16777227 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

10.88.0.7/32 identity=11550 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

208.0.0.0/4 identity=16777238 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

192.169.0.0/16 identity=16777250 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

169.254.169.192/27 identity=16777271 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

192.168.0.0/16 identity=16777220 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

169.254.169.248/30 identity=16777274 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

169.254.4.6/32 identity=1 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

10.88.0.4/32 identity=13693 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

176.0.0.0/4 identity=16777229 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

170.0.0.0/7 identity=16777251 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

128.0.0.0/3 identity=16777228 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

fe80::2c1b:85ff:fe5d:d117/128 identity=47494 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

194.0.0.0/7 identity=16777241 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

172.0.0.0/12 identity=16777236 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

fe80::68a6:82ff:feac:f6c7/128 identity=15194 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

fe80::94de:b5ff:fea5:cfd7/128 identity=15194 encryptkey=0 tunnelendpoint=10.128.0.31 nodeid=2752 sync

10.88.1.10/32 identity=26679 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

10.88.2.4/32 identity=3572 encryptkey=0 tunnelendpoint=10.128.0.31 nodeid=2752 sync

169.254.169.0/25 identity=16777269 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

160.0.0.0/5 identity=16777230 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

10.0.0.0/8 identity=16777218 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

10.88.2.9/32 identity=9520 encryptkey=0 tunnelendpoint=10.128.0.31 nodeid=2752 sync

10.88.0.3/32 identity=3122 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

168.0.0.0/8 identity=16777252 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

192.160.0.0/13 identity=16777247 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

192.176.0.0/12 identity=16777246 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

169.254.169.128/26 identity=16777270 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

10.88.0.13/32 identity=34776 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

10.88.2.6/32 identity=11550 encryptkey=0 tunnelendpoint=10.128.0.31 nodeid=2752 sync

34.69.188.144/32 identity=1 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

fe80::6010:f5ff:fe28:493b/128 identity=3572 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

10.88.1.15/32 identity=11550 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

fe80::f8f0:b1ff:feda:2203/128 identity=3122 encryptkey=0 tunnelendpoint=10.128.0.31 nodeid=2752 sync

192.0.0.0/9 identity=16777243 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

10.88.2.8/32 identity=15194 encryptkey=0 tunnelendpoint=10.128.0.31 nodeid=2752 sync

10.88.2.2/32 identity=47494 encryptkey=0 tunnelendpoint=10.128.0.31 nodeid=2752 sync

10.88.2.3/32 identity=3122 encryptkey=0 tunnelendpoint=10.128.0.31 nodeid=2752 sync

169.254.169.254/32 identity=16777277 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

224.0.0.0/3 identity=16777237 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

174.0.0.0/7 identity=16777231 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

10.88.0.1/32 identity=1 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

10.88.1.4/32 identity=1062 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

10.88.0.2/32 identity=47494 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

169.240.0.0/13 identity=16777257 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

192.128.0.0/11 identity=16777245 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

169.254.176.0/20 identity=16777264 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

fe80::940d:dcff:fe20:4de5/128 identity=3122 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

10.88.1.3/32 identity=27686 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

fe80::6c64:fdff:fe43:2a1d/128 identity=29491 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

10.88.1.13/32 identity=62991 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

10.88.1.7/32 identity=52269 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

fe80::100f:a6ff:fe1d:efe6/128 identity=52269 encryptkey=0 tunnelendpoint=10.128.0.30 nodeid=56763 sync

173.0.0.0/8 identity=16777232 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

172.64.0.0/10 identity=16777234 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

fe80::d408:bdff:fe2f:edca/128 identity=9520 encryptkey=0 tunnelendpoint=10.128.0.31 nodeid=2752 sync

172.16.0.0/12 identity=16777219 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

169.254.170.0/23 identity=16777267 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync

169.255.0.0/16 identity=16777260 encryptkey=0 tunnelendpoint=0.0.0.0 nodeid=0 sync
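Because step 11 repeats the listings to see what the JupyterHub release changed, it can help to save the identity list before and after the install and compare the two. The following is only a sketch: the function name `new_identities` and the two file names are hypothetical, and it assumes each file holds the text output of `cilium identity list`.

```shell
# Print identity IDs present in the "after" capture but not in the "before" one.
# new_identities and both file arguments are hypothetical; each file holds
# saved `cilium identity list` text output.
new_identities() {
  awk '
    # Identity rows start with the numeric ID; label continuation lines do not.
    $1 ~ /^[0-9]+$/ {
      if (FILENAME == ARGV[1]) seen[$1] = 1   # first file: remember known IDs
      else if (!($1 in seen)) print $1        # second file: report unseen IDs
    }
  ' "$1" "$2"
}

# Usage (hypothetical file names):
#   cilium identity list > before.txt    # before installing JupyterHub
#   cilium identity list > after.txt     # after installing JupyterHub
#   new_identities before.txt after.txt
```

Each ID it prints can then be looked up in the identity list to see its labels.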

12: Obtain the network policy applied to the hub endpoint (ID 3535 in the endpoint list above) so that you can compare the policy created by JupyterHub’s core network policy with any later policy updates that might resolve the issue

root@gke-test-cluster-1-default-pool-d0991fd4-c36l:/home/cilium# cilium bpf policy get 3535

/sys/fs/bpf/tc/globals/cilium_policy_03535:

POLICY DIRECTION LABELS (source:key[=value]) PORT/PROTO PROXY PORT BYTES PACKETS

Allow Ingress reserved:host ANY NONE 5328 72

Allow Ingress k8s:app=jupyterhub 8081/TCP NONE 0 0

k8s:component=proxy

k8s:hub.jupyter.org/network-access-hub=true

k8s:hub.jupyter.org/network-access-singleuser=true

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=testjupyterhubdev

k8s:io.cilium.k8s.namespace.labels.name=testjupyterhubdev

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=testjupyterhubdev

k8s:release=testjupyterhub

Allow Egress reserved:host 53/TCP NONE 0 0

Allow Egress reserved:host ANY NONE 3888 72

Allow Egress reserved:host 53/UDP NONE 0 0

Allow Egress reserved:remote-node 53/TCP NONE 0 0

Allow Egress reserved:remote-node ANY NONE 0 0

Allow Egress reserved:remote-node 53/UDP NONE 0 0

Allow Egress reserved:kube-apiserver ANY NONE 0 0

reserved:remote-node

Allow Egress reserved:kube-apiserver 53/UDP NONE 0 0

reserved:remote-node

Allow Egress reserved:kube-apiserver 53/TCP NONE 0 0

reserved:remote-node

Allow Egress k8s:app=jupyterhub 8001/TCP NONE 0 0

k8s:component=proxy

k8s:hub.jupyter.org/network-access-hub=true

k8s:hub.jupyter.org/network-access-singleuser=true

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=testjupyterhubdev

k8s:io.cilium.k8s.namespace.labels.name=testjupyterhubdev

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=testjupyterhubdev

k8s:release=testjupyterhub

Allow Egress cidr:10.128.0.28/32 ANY NONE 0 0

reserved:kube-apiserver

reserved:world

Allow Egress cidr:10.128.0.28/32 53/TCP NONE 0 0

reserved:kube-apiserver

reserved:world

Allow Egress cidr:10.128.0.28/32 53/UDP NONE 0 0

reserved:kube-apiserver

reserved:world

Allow Egress cidr:10.0.0.0/8 53/TCP NONE 0 0

reserved:world

Allow Egress cidr:10.0.0.0/8 53/UDP NONE 0 0

reserved:world

Allow Egress cidr:10.0.0.0/8 ANY NONE 0 0

reserved:world

Allow Egress cidr:172.16.0.0/12 53/TCP NONE 0 0

reserved:world

Allow Egress cidr:172.16.0.0/12 ANY NONE 0 0

reserved:world

Allow Egress cidr:172.16.0.0/12 53/UDP NONE 0 0

reserved:world

Allow Egress cidr:192.168.0.0/16 ANY NONE 0 0

reserved:world

Allow Egress cidr:192.168.0.0/16 53/UDP NONE 0 0

reserved:world

Allow Egress cidr:192.168.0.0/16 53/TCP NONE 0 0

reserved:world

Allow Egress cidr:64.0.0.0/2 ANY NONE 0 0

reserved:world

Allow Egress cidr:32.0.0.0/3 ANY NONE 0 0

reserved:world

Allow Egress cidr:16.0.0.0/4 ANY NONE 0 0

reserved:world

Allow Egress cidr:0.0.0.0/5 ANY NONE 0 0

reserved:world

Allow Egress cidr:12.0.0.0/6 ANY NONE 0 0

reserved:world

Allow Egress cidr:8.0.0.0/7 ANY NONE 0 0

reserved:world

Allow Egress cidr:11.0.0.0/8 ANY NONE 0 0

reserved:world

Allow Egress cidr:128.0.0.0/3 ANY NONE 0 0

reserved:world

Allow Egress cidr:176.0.0.0/4 ANY NONE 0 0

reserved:world

Allow Egress cidr:160.0.0.0/5 ANY NONE 0 0

reserved:world

Allow Egress cidr:174.0.0.0/7 ANY NONE 0 0

reserved:world

Allow Egress cidr:173.0.0.0/8 ANY NONE 0 0

reserved:world

Allow Egress cidr:172.128.0.0/9 ANY NONE 0 0

reserved:world

Allow Egress cidr:172.64.0.0/10 ANY NONE 0 0

reserved:world

Allow Egress cidr:172.32.0.0/11 ANY NONE 0 0

reserved:world

Allow Egress cidr:172.0.0.0/12 ANY NONE 0 0

reserved:world

Allow Egress cidr:224.0.0.0/3 ANY NONE 0 0

reserved:world

Allow Egress cidr:208.0.0.0/4 ANY NONE 0 0

reserved:world

Allow Egress cidr:200.0.0.0/5 ANY NONE 0 0

reserved:world

Allow Egress cidr:196.0.0.0/6 ANY NONE 0 0

reserved:world

Allow Egress cidr:194.0.0.0/7 ANY NONE 0 0

reserved:world

Allow Egress cidr:193.0.0.0/8 ANY NONE 0 0

reserved:world

Allow Egress cidr:192.0.0.0/9 ANY NONE 0 0

reserved:world

Allow Egress cidr:192.192.0.0/10 ANY NONE 0 0

reserved:world

Allow Egress cidr:192.128.0.0/11 ANY NONE 0 0

reserved:world

Allow Egress cidr:192.176.0.0/12 ANY NONE 0 0

reserved:world

Allow Egress cidr:192.160.0.0/13 ANY NONE 0 0

reserved:world

Allow Egress cidr:192.172.0.0/14 ANY NONE 0 0

reserved:world

Allow Egress cidr:192.170.0.0/15 ANY NONE 0 0

reserved:world

Allow Egress cidr:192.169.0.0/16 ANY NONE 0 0

reserved:world

Allow Egress cidr:170.0.0.0/7 ANY NONE 0 0

reserved:world

Allow Egress cidr:168.0.0.0/8 ANY NONE 0 0

reserved:world

Allow Egress cidr:169.0.0.0/9 ANY NONE 0 0

reserved:world

Allow Egress cidr:169.128.0.0/10 ANY NONE 0 0

reserved:world

Allow Egress cidr:169.192.0.0/11 ANY NONE 0 0

reserved:world

Allow Egress cidr:169.224.0.0/12 ANY NONE 0 0

reserved:world

Allow Egress cidr:169.240.0.0/13 ANY NONE 0 0

reserved:world

Allow Egress cidr:169.248.0.0/14 ANY NONE 0 0

reserved:world

Allow Egress cidr:169.252.0.0/15 ANY NONE 0 0

reserved:world

Allow Egress cidr:169.255.0.0/16 ANY NONE 0 0

reserved:world

Allow Egress cidr:169.254.0.0/17 ANY NONE 0 0

reserved:world

Allow Egress cidr:169.254.192.0/18 ANY NONE 0 0

reserved:world

Allow Egress cidr:169.254.128.0/19 ANY NONE 0 0

reserved:world

Allow Egress cidr:169.254.176.0/20 ANY NONE 0 0

reserved:world

Allow Egress cidr:169.254.160.0/21 ANY NONE 0 0

reserved:world

Allow Egress cidr:169.254.172.0/22 ANY NONE 0 0

reserved:world

Allow Egress cidr:169.254.170.0/23 ANY NONE 0 0

reserved:world

Allow Egress cidr:169.254.168.0/24 ANY NONE 0 0

reserved:world

Allow Egress cidr:169.254.169.0/25 ANY NONE 0 0

reserved:world

Allow Egress cidr:169.254.169.128/26 ANY NONE 0 0

reserved:world

Allow Egress cidr:169.254.169.192/27 ANY NONE 0 0

reserved:world

Allow Egress cidr:169.254.169.224/28 ANY NONE 0 0

reserved:world

Allow Egress cidr:169.254.169.240/29 ANY NONE 0 0

reserved:world

Allow Egress cidr:169.254.169.248/30 ANY NONE 0 0

reserved:world

Allow Egress cidr:169.254.169.252/31 ANY NONE 0 0

reserved:world

Allow Egress cidr:169.254.169.255/32 ANY NONE 0 0

reserved:world

Allow Egress cidr:169.254.169.254/32 ANY NONE 0 0

reserved:world

13: Enable debug mode for Cilium to get low-level, verbose output

cilium config debug=true debugLB=true

# Enable debug mode for the hub endpoint

cilium endpoint config 3535 debug=true

14: Monitor rejected packets for the hub

root@gke-test-cluster-1-default-pool-d0991fd4-c36l:/home/cilium# cilium monitor -v

CPU 01: MARK 0x394343c4 FROM 3535 DEBUG: Conntrack lookup 1/2: src=10.88.0.13:58282 dst=10.92.0.10:53

CPU 01: MARK 0x394343c4 FROM 3535 DEBUG: Conntrack lookup 2/2: nexthdr=17 flags=4

CPU 01: MARK 0x394343c4 FROM 3535 DEBUG: CT entry found lifetime=16813126, revnat=8

CPU 01: MARK 0x394343c4 FROM 3535 DEBUG: CT verdict: Reply, revnat=8

CPU 01: MARK 0x394343c4 FROM 3535 DEBUG: Conntrack lookup 1/2: src=10.88.0.13:58282 dst=10.88.0.2:53

CPU 01: MARK 0x394343c4 FROM 3535 DEBUG: Conntrack lookup 2/2: nexthdr=17 flags=1

CPU 01: MARK 0x394343c4 FROM 3535 DEBUG: CT verdict: New, revnat=0

CPU 01: MARK 0x394343c4 FROM 3535 DEBUG: Successfully mapped addr=10.88.0.2 to identity=47494

CPU 01: MARK 0x394343c4 FROM 3535 DEBUG: Policy evaluation would deny packet from 34776 to 47494

Policy verdict log: flow 0x394343c4 local EP ID 3535, remote ID 47494, proto 17, egress, action deny, match none, 10.88.0.13:58282 -> 10.88.0.2:53 udp

xx drop (Policy denied) flow 0x394343c4 to endpoint 0, file bpf_lxc.c line 1311, , identity 34776->47494: 10.88.0.13:58282 -> 10.88.0.2:53 udp

The cilium monitor -v command generates a long series of messages; the list above is a selected subset. Cilium maps the DNS address 10.88.0.2 to identity 47494 and the hub address 10.88.0.13 to identity 34776. The packet is dropped because the hub endpoint 3535 has no egress rule that allows traffic to identity 47494.
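Because the monitor output is noisy, it can help to reduce it to just the dropped flows. The following is a minimal sketch, not part of the original troubleshooting session: summarize_drops is a hypothetical helper name, and the pattern assumes drop lines have the same layout as the "xx drop (Policy denied)" line shown above.

```shell
#!/usr/bin/env bash
# Summarize policy drops from `cilium monitor` text output read on stdin.
# Prints one line per drop:
#   <source identity> <destination identity> <source addr> <destination addr> <protocol>
# summarize_drops is a hypothetical helper; it assumes the drop line format
# seen in the monitor output above.
summarize_drops() {
  sed -n -E 's/^xx drop \(Policy denied\).* identity ([0-9]+)->([0-9]+): ([^ ]+) -> ([^ ]+) ([a-z0-9]+).*$/\1 \2 \3 \4 \5/p'
}

# Sample input: the drop line copied from the monitor output above.
sample='xx drop (Policy denied) flow 0x394343c4 to endpoint 0, file bpf_lxc.c line 1311, , identity 34776->47494: 10.88.0.13:58282 -> 10.88.0.2:53 udp'

printf '%s\n' "$sample" | summarize_drops
# prints: 34776 47494 10.88.0.13:58282 10.88.0.2:53 udp
```

On a node, the same function could read live output, for example by piping cilium monitor -v into it. The identity pair in the first two columns is what to look for in the endpoint's policy map.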

15: Modify the JupyterHub deployment to include an additional egress policy for DNS, as described in my previous post, to validate that applying an appropriate DNS policy resolves the issue
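The previous post is not reproduced here, so the following is only a sketch of what a DNS egress policy could look like if written as a standalone Kubernetes NetworkPolicy. The namespace and release label come from the output in this post; the policy name, the file name, and the hub pod label component: hub are assumptions for illustration, and the actual change may instead be made through the Helm chart values.

```shell
#!/usr/bin/env bash
# Write an illustrative NetworkPolicy manifest that allows DNS egress
# (port 53, UDP and TCP) from the hub pod to pods in kube-system.
# Assumptions (not from the post): the policy name "hub-allow-dns-egress",
# the file name, and the hub pod label "component: hub".
cat > hub-dns-egress.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: hub-allow-dns-egress
  namespace: testjupyterhubdev
spec:
  podSelector:
    matchLabels:
      app: jupyterhub
      component: hub
      release: testjupyterhub
  policyTypes:
    - Egress
  egress:
    - to:
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: kube-system
      ports:
        - protocol: UDP
          port: 53
        - protocol: TCP
          port: 53
EOF

# Apply it from a machine with cluster access:
# kubectl apply -f hub-dns-egress.yaml
```

NetworkPolicies are additive, so a policy like this would only add the port 53 allowance on top of the egress rules that already apply to the hub pod.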

16: Check the policy applied to the hub’s endpoint

root@gke-test-cluster-1-default-pool-d0991fd4-c36l:/home/cilium# cilium bpf policy get 3535

/sys/fs/bpf/tc/globals/cilium_policy_03535:

POLICY DIRECTION LABELS (source:key[=value]) PORT/PROTO PROXY PORT BYTES PACKETS

Allow Ingress reserved:host ANY NONE 197322 2357

Allow Ingress k8s:app=jupyterhub 8081/TCP NONE 0 0

k8s:component=proxy

k8s:hub.jupyter.org/network-access-hub=true

k8s:hub.jupyter.org/network-access-singleuser=true

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=testjupyterhubdev

k8s:io.cilium.k8s.namespace.labels.name=testjupyterhubdev

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=testjupyterhubdev

k8s:release=testjupyterhub

Allow Egress reserved:host 53/UDP NONE 0 0

Allow Egress reserved:host 53/TCP NONE 0 0

Allow Egress reserved:host ANY NONE 238182 1847

Allow Egress reserved:remote-node ANY NONE 0 0

Allow Egress reserved:remote-node 53/UDP NONE 0 0

Allow Egress reserved:remote-node 53/TCP NONE 0 0

Allow Egress reserved:kube-apiserver ANY NONE 0 0

reserved:remote-node

Allow Egress reserved:kube-apiserver 53/TCP NONE 0 0

reserved:remote-node

Allow Egress reserved:kube-apiserver 53/UDP NONE 0 0

reserved:remote-node

Allow Egress k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system 53/TCP NONE 0 0

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=glbc

k8s:name=glbc

Allow Egress k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system 53/UDP NONE 0 0

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=glbc

k8s:name=glbc

Allow Egress k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system 53/TCP NONE 0 0

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=konnectivity-agent

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=konnectivity-agent

Allow Egress k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system 53/UDP NONE 0 0

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=konnectivity-agent

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=konnectivity-agent

Allow Egress k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system 53/UDP NONE 0 0

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=kube-dns

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=kube-dns

Allow Egress k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system 53/TCP NONE 0 0

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=kube-dns

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=kube-dns

Allow Egress k8s:app=jupyterhub 8001/TCP NONE 7289 65

k8s:component=proxy

k8s:hub.jupyter.org/network-access-hub=true

k8s:hub.jupyter.org/network-access-singleuser=true