Jupyter is a well-known open-source project that develops and supports interactive computing products such as Jupyter Notebook, JupyterLab, and JupyterHub. These products are widely used by data science communities worldwide. JupyterHub is a multi-user Jupyter notebook environment: it lets users create web-based interactive notebook documents without the burden of installation and maintenance. JupyterHub can be installed on Kubernetes or on a stand-alone machine. For Kubernetes, the community provides the Zero to JupyterHub with Kubernetes distribution, and its documentation covers installation on all major cloud providers. I recently tried to set up JupyterHub on GKE Autopilot with kube-dns and ran into an issue. This post describes the issue, the steps I took to troubleshoot it, and the solution.

Prerequisites

- Basic understanding of Jupyter notebook / JupyterLab

- Understanding of containerized application architecture

- Basic understanding of Kubernetes

- Basic understanding of Helm charts

- Experience with GCP services such as GKE and Cloud Logging

Issue description

The JupyterHub documentation includes steps to set up an instance on a GKE Standard cluster, but there are no official steps for GKE Autopilot. I found several reported issues about setting up JupyterHub on Autopilot and applied the fixes suggested in those discussions. I followed these steps to install:

Step 1: Create a GKE Autopilot cluster

Step 2: Create a config.yaml file with the following values:

```yaml
singleuser:
  cloudMetadata:
    blockWithIptables: false
hub:
  readinessProbe:
    initialDelaySeconds: 60
```

I chose these values based on issues reported and discussed in online forums.

Step 3: Run the Helm commands from the documentation

```shell
helm upgrade --cleanup-on-fail \
  --install testjupyterhub jupyterhub/jupyterhub \
  --namespace testjupyterhubdev \
  --create-namespace \
  --version=3.0.0-beta.3 \
  --values config.yaml
```

JupyterHub did not install successfully on GKE Autopilot. The hub pod remained in CrashLoopBackOff, and the hub container repeatedly logged the error `api_request to the proxy failed with status code 599, retrying...`:

```text
[W 2023-07-21 17:35:47.524 JupyterHub proxy:899] api_request to the proxy failed with status code 599, retrying...
[E 2023-07-21 17:35:47.525 JupyterHub app:3382]
Traceback (most recent call last):
  File "/usr/local/lib/python3.11/site-packages/jupyterhub/app.py", line 3380, in launch_instance_async
    await self.start()
  File "/usr/local/lib/python3.11/site-packages/jupyterhub/app.py", line 3146, in start
    await self.proxy.get_all_routes()
  File "/usr/local/lib/python3.11/site-packages/jupyterhub/proxy.py", line 946, in get_all_routes
    resp = await self.api_request('', client=client)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.11/site-packages/jupyterhub/proxy.py", line 910, in api_request
    result = await exponential_backoff(
             ^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.11/site-packages/jupyterhub/utils.py", line 237, in exponential_backoff
    raise asyncio.TimeoutError(fail_message)
TimeoutError: Repeated api_request to proxy path "" failed.
```

Issue troubleshooting

I searched online, but every discussion I found suggested the config values I had already included. The error message pointed to a network-related issue: JupyterHub calls the proxy API through Tornado's HTTP client, which reports status code 599 when no HTTP response is received at all, for example on a connection failure or timeout. However, it was not clear where to start. I opened an issue on the zero-to-jupyterhub-k8s GitHub repo, but the maintainers closed it with a suggestion to search the forums for similar issues. At this point, I had to investigate the issue myself. I followed these steps to troubleshoot and find a solution.

Step 1: Install on the current version of GKE Standard

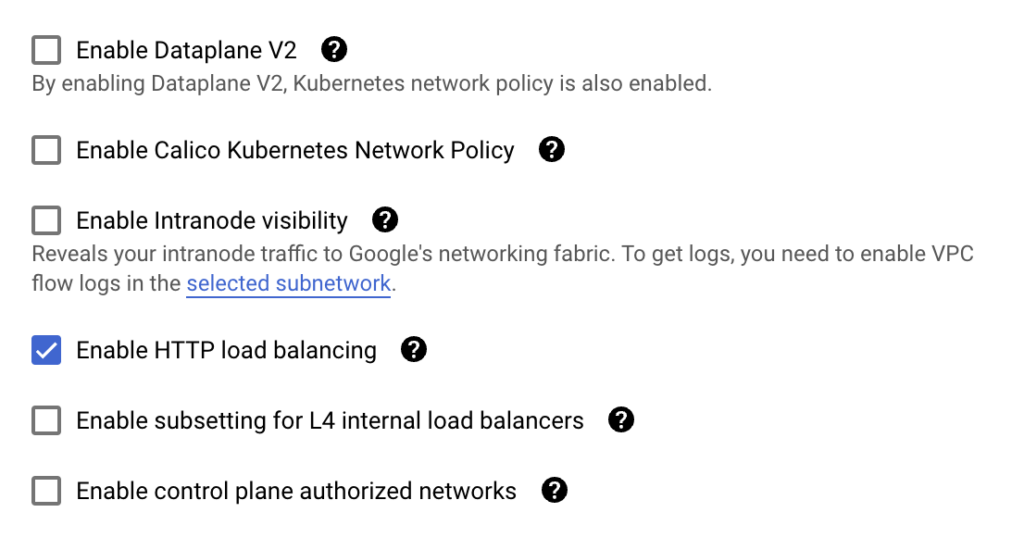

While creating GKE standard cluster, I checked the network settings. The screen shot below indicates the settings and their default values for a new cluster

I tested two variations: one with the Enable Dataplane V2 setting turned on, and one with the Enable Calico Kubernetes Network Policy setting turned on. The installation on GKE Standard succeeded with the default settings and with Calico network policy enabled. However, it failed with the same error when Dataplane V2 was enabled.

Step 2: Research GKE Dataplane V2

GKE Dataplane V2 is an optimized dataplane for Kubernetes networking. It uses eBPF instead of iptables to implement network policies. All GKE Autopilot clusters have Dataplane V2 enabled by default, which explains why JupyterHub on GKE Autopilot consistently failed with this error.
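If you want to confirm which dataplane a cluster uses before installing, the cluster description reports it. The sketch below assumes that the JSON output of `gcloud container clusters describe --format=json` carries a `networkConfig.datapathProvider` field reading `ADVANCED_DATAPATH` when Dataplane V2 is enabled. The helper names are hypothetical, and the sample dictionaries are hand-written, not real gcloud output.

```python
import json
import subprocess


def uses_dataplane_v2(cluster: dict) -> bool:
    """Return True if a GKE cluster description reports Dataplane V2.

    Assumption: Dataplane V2 appears as
    networkConfig.datapathProvider == "ADVANCED_DATAPATH".
    """
    provider = cluster.get("networkConfig", {}).get("datapathProvider", "")
    return provider == "ADVANCED_DATAPATH"


def describe_cluster(name: str, region: str) -> dict:
    """Hypothetical helper: fetch a cluster description with the gcloud CLI.

    Requires an installed, authenticated gcloud; not called in the example below.
    """
    result = subprocess.run(
        ["gcloud", "container", "clusters", "describe", name,
         f"--region={region}", "--format=json"],
        check=True, capture_output=True, text=True,
    )
    return json.loads(result.stdout)


# Hand-written, trimmed descriptions (illustrative only, not real gcloud output).
autopilot_cluster = {"autopilot": {"enabled": True},
                     "networkConfig": {"datapathProvider": "ADVANCED_DATAPATH"}}
standard_cluster = {"networkConfig": {}}

print(uses_dataplane_v2(autopilot_cluster))  # True
print(uses_dataplane_v2(standard_cluster))   # False
```

Against a live cluster, you would call `uses_dataplane_v2(describe_cluster("test-cluster-1", "us-central1"))`.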

Step 3: Check the zero-to-jupyterhub-k8s network policies

I collected the zero-to-jupyterhub-k8s network policies by running the install with the --dry-run and --debug options:

```shell
helm upgrade --cleanup-on-fail \
  --dry-run \
  --debug \
  --install testjupyterhub jupyterhub/jupyterhub \
  --namespace testjupyterhubdev \
  --create-namespace \
  --version=3.0.0-beta.3 \
  --values config.yaml
```

You can also inspect the network policies on the GKE cluster after the installation:

```shell
kubectl describe networkpolicy hub -n testjupyterhubdev
kubectl describe networkpolicy proxy -n testjupyterhubdev
kubectl describe networkpolicy singleuser -n testjupyterhubdev
```

The core network policies created by the JupyterHub installation are shown below:

```yaml
# Source: jupyterhub/templates/hub/netpol.yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: hub
  labels:
    component: hub
    app: jupyterhub
    release: testjupyterhub
    chart: jupyterhub-3.0.0-beta.3
    heritage: Helm
spec:
  podSelector:
    matchLabels:
      component: hub
      app: jupyterhub
      release: testjupyterhub
  policyTypes:
    - Ingress
    - Egress
  # IMPORTANT:
  # NetworkPolicy's ingress "from" and egress "to" rule specifications require
  # great attention to detail. A quick summary is:
  #
  # 1. You can provide "from"/"to" rules that provide access either ports or a
  #    subset of ports.
  # 2. You can for each "from"/"to" rule provide any number of
  #    "sources"/"destinations" of four different kinds.
  #    - podSelector - targets pods with a certain label in the same namespace as the NetworkPolicy
  #    - namespaceSelector - targets all pods running in namespaces with a certain label
  #    - namespaceSelector and podSelector - targets pods with a certain label running in namespaces with a certain label
  #    - ipBlock - targets network traffic from/to a set of IP address ranges
  #
  # Read more at: https://kubernetes.io/docs/concepts/services-networking/network-policies/#behavior-of-to-and-from-selectors
  #
  ingress:
    # allowed pods (hub.jupyter.org/network-access-hub) --> hub
    - ports:
        - port: http
      from:
        # source 1 - labeled pods
        - podSelector:
            matchLabels:
              hub.jupyter.org/network-access-hub: "true"
  egress:
    # hub --> proxy
    - to:
        - podSelector:
            matchLabels:
              component: proxy
              app: jupyterhub
              release: testjupyterhub
      ports:
        - port: 8001
    # hub --> singleuser-server
    - to:
        - podSelector:
            matchLabels:
              component: singleuser-server
              app: jupyterhub
              release: testjupyterhub
      ports:
        - port: 8888
    # Allow outbound connections to the DNS port in the private IP ranges
    - ports:
        - protocol: UDP
          port: 53
        - protocol: TCP
          port: 53
      to:
        - ipBlock:
            cidr: 10.0.0.0/8
        - ipBlock:
            cidr: 172.16.0.0/12
        - ipBlock:
            cidr: 192.168.0.0/16
    # Allow outbound connections to non-private IP ranges
    - to:
        - ipBlock:
            cidr: 0.0.0.0/0
            except:
              # As part of this rule, don't:
              # - allow outbound connections to private IP
              - 10.0.0.0/8
              - 172.16.0.0/12
              - 192.168.0.0/16
              # - allow outbound connections to the cloud metadata server
              - 169.254.169.254/32
    # Allow outbound connections to private IP ranges
    - to:
        - ipBlock:
            cidr: 10.0.0.0/8
        - ipBlock:
            cidr: 172.16.0.0/12
        - ipBlock:
            cidr: 192.168.0.0/16
    # Allow outbound connections to the cloud metadata server
    - to:
        - ipBlock:
            cidr: 169.254.169.254/32
---
# Source: jupyterhub/templates/proxy/netpol.yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: proxy
  labels:
    component: proxy
    app: jupyterhub
    release: testjupyterhub
    chart: jupyterhub-3.0.0-beta.3
    heritage: Helm
spec:
  podSelector:
    matchLabels:
      component: proxy
      app: jupyterhub
      release: testjupyterhub
  policyTypes:
    - Ingress
    - Egress
  # IMPORTANT:
  # NetworkPolicy's ingress "from" and egress "to" rule specifications require
  # great attention to detail. A quick summary is:
  #
  # 1. You can provide "from"/"to" rules that provide access either ports or a
  #    subset of ports.
  # 2. You can for each "from"/"to" rule provide any number of
  #    "sources"/"destinations" of four different kinds.
  #    - podSelector - targets pods with a certain label in the same namespace as the NetworkPolicy
  #    - namespaceSelector - targets all pods running in namespaces with a certain label
  #    - namespaceSelector and podSelector - targets pods with a certain label running in namespaces with a certain label
  #    - ipBlock - targets network traffic from/to a set of IP address ranges
  #
  # Read more at: https://kubernetes.io/docs/concepts/services-networking/network-policies/#behavior-of-to-and-from-selectors
  #
  ingress:
    # allow incoming traffic to these ports independent of source
    - ports:
        - port: http
        - port: https
    # allowed pods (hub.jupyter.org/network-access-proxy-http) --> proxy (http/https port)
    - ports:
        - port: http
      from:
        # source 1 - labeled pods
        - podSelector:
            matchLabels:
              hub.jupyter.org/network-access-proxy-http: "true"
    # allowed pods (hub.jupyter.org/network-access-proxy-api) --> proxy (api port)
    - ports:
        - port: api
      from:
        # source 1 - labeled pods
        - podSelector:
            matchLabels:
              hub.jupyter.org/network-access-proxy-api: "true"
  egress:
    # proxy --> hub
    - to:
        - podSelector:
            matchLabels:
              component: hub
              app: jupyterhub
              release: testjupyterhub
      ports:
        - port: 8081
    # proxy --> singleuser-server
    - to:
        - podSelector:
            matchLabels:
              component: singleuser-server
              app: jupyterhub
              release: testjupyterhub
      ports:
        - port: 8888
    # Allow outbound connections to the DNS port in the private IP ranges
    - ports:
        - protocol: UDP
          port: 53
        - protocol: TCP
          port: 53
      to:
        - ipBlock:
            cidr: 10.0.0.0/8
        - ipBlock:
            cidr: 172.16.0.0/12
        - ipBlock:
            cidr: 192.168.0.0/16
    # Allow outbound connections to non-private IP ranges
    - to:
        - ipBlock:
            cidr: 0.0.0.0/0
            except:
              # As part of this rule, don't:
              # - allow outbound connections to private IP
              - 10.0.0.0/8
              - 172.16.0.0/12
              - 192.168.0.0/16
              # - allow outbound connections to the cloud metadata server
              - 169.254.169.254/32
    # Allow outbound connections to private IP ranges
    - to:
        - ipBlock:
            cidr: 10.0.0.0/8
        - ipBlock:
            cidr: 172.16.0.0/12
        - ipBlock:
            cidr: 192.168.0.0/16
    # Allow outbound connections to the cloud metadata server
    - to:
        - ipBlock:
            cidr: 169.254.169.254/32
---
# Source: jupyterhub/templates/singleuser/netpol.yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: singleuser
  labels:
    component: singleuser
    app: jupyterhub
    release: testjupyterhub
    chart: jupyterhub-3.0.0-beta.3
    heritage: Helm
spec:
  podSelector:
    matchLabels:
      component: singleuser-server
      app: jupyterhub
      release: testjupyterhub
  policyTypes:
    - Ingress
    - Egress
  # IMPORTANT:
  # NetworkPolicy's ingress "from" and egress "to" rule specifications require
  # great attention to detail. A quick summary is:
  #
  # 1. You can provide "from"/"to" rules that provide access either ports or a
  #    subset of ports.
  # 2. You can for each "from"/"to" rule provide any number of
  #    "sources"/"destinations" of four different kinds.
  #    - podSelector - targets pods with a certain label in the same namespace as the NetworkPolicy
  #    - namespaceSelector - targets all pods running in namespaces with a certain label
  #    - namespaceSelector and podSelector - targets pods with a certain label running in namespaces with a certain label
  #    - ipBlock - targets network traffic from/to a set of IP address ranges
  #
  # Read more at: https://kubernetes.io/docs/concepts/services-networking/network-policies/#behavior-of-to-and-from-selectors
  #
  ingress:
    # allowed pods (hub.jupyter.org/network-access-singleuser) --> singleuser-server
    - ports:
        - port: notebook-port
      from:
        # source 1 - labeled pods
        - podSelector:
            matchLabels:
              hub.jupyter.org/network-access-singleuser: "true"
  egress:
    # singleuser-server --> hub
    - to:
        - podSelector:
            matchLabels:
              component: hub
              app: jupyterhub
              release: testjupyterhub
      ports:
        - port: 8081
    # singleuser-server --> proxy
    # singleuser-server --> autohttps
    #
    # While not critical for core functionality, a user or library code may rely
    # on communicating with the proxy or autohttps pods via a k8s Service it can
    # detected from well known environment variables.
    #
    - to:
        - podSelector:
            matchLabels:
              component: proxy
              app: jupyterhub
              release: testjupyterhub
      ports:
        - port: 8000
    - to:
        - podSelector:
            matchLabels:
              component: autohttps
              app: jupyterhub
              release: testjupyterhub
      ports:
        - port: 8080
        - port: 8443
    # Allow outbound connections to the DNS port in the private IP ranges
    - ports:
        - protocol: UDP
          port: 53
        - protocol: TCP
          port: 53
      to:
        - ipBlock:
            cidr: 10.0.0.0/8
        - ipBlock:
            cidr: 172.16.0.0/12
        - ipBlock:
            cidr: 192.168.0.0/16
    # Allow outbound connections to non-private IP ranges
    - to:
        - ipBlock:
            cidr: 0.0.0.0/0
            except:
              # As part of this rule, don't:
              # - allow outbound connections to private IP
              - 10.0.0.0/8
              - 172.16.0.0/12
              - 192.168.0.0/16
              # - allow outbound connections to the cloud metadata server
              - 169.254.169.254/32
```

Step 4: Check network logs for JupyterHub on GKE Autopilot

To check network-level logs on GKE Autopilot, I enabled network policy logging with the following command:

```shell
kubectl edit networklogging default
```

Set `log` to `true` under both `allow` and `deny`. The default value of `log` is `false`.

```yaml
# Please edit the object below. Lines beginning with a '#' will be ignored,
# and an empty file will abort the edit. If an error occurs while saving this file will be
# reopened with the relevant failures.
#
apiVersion: networking.gke.io/v1alpha1
kind: NetworkLogging
metadata:
  annotations:
    components.gke.io/component-name: advanceddatapath
    components.gke.io/component-version: 26.1.27
    components.gke.io/layer: addon
  creationTimestamp: "2023-07-21T21:37:19Z"
  generation: 2
  labels:
    addonmanager.kubernetes.io/mode: EnsureExists
  name: default
  resourceVersion: "4226"
  uid: 502866c8-a065-4b44-b0ab-1b65b8a5ca5e
spec:
  cluster:
    allow:
      delegate: false
      log: true
    deny:
      delegate: false
      log: true
```

Then, in Cloud Logging, query the denied network requests in your cluster:

```text
resource.type="k8s_node"
resource.labels.location="us-central1"
resource.labels.cluster_name="test-cluster-1"
logName="projects/my-project/logs/policy-action"
jsonPayload.disposition="deny"
```

I collected the following log entry from this query:

```json
[
  {
    "insertId": "gbko6hipkcfdidfg",
    "jsonPayload": {
      "dest": {
        "namespace": "kube-system",
        "pod_namespace": "kube-system",
        "workload_kind": "DaemonSet",
        "pod_name": "node-local-dns-svv4s",
        "workload_name": "node-local-dns"
      },
      "count": 4,
      "node_name": "gk3-test-cluster-1-pool-1-a0938fbf-bn9k",
      "connection": {
        "direction": "egress",
        "protocol": "udp",
        "src_ip": "10.114.1.80",
        "dest_ip": "10.114.1.72",
        "dest_port": 53,
        "src_port": 56625
      },
      "disposition": "deny",
      "src": {
        "workload_kind": "Deployment",
        "workload_name": "hub",
        "pod_name": "hub-74bb745848-vf8lh",
        "namespace": "testjupyterhubdev",
        "pod_namespace": "testjupyterhubdev"
      }
    },
    "resource": {
      "type": "k8s_node",
      "labels": {
        "cluster_name": "test-cluster-1",
        "project_id": "my-project",
        "location": "us-central1",
        "node_name": "gk3-test-cluster-1-pool-1-a0938fbf-bn9k"
      }
    },
    "timestamp": "2023-07-21T22:02:35.678145877Z",
    "logName": "projects/my-project/logs/policy-action",
    "receiveTimestamp": "2023-07-21T22:02:44.859820732Z"
  }
]
```

The log entry shows that the hub pod's DNS query (UDP port 53) to the node-local-dns pod in the kube-system namespace was denied, so the hub could not resolve DNS names inside the cluster.
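This denial is surprising at first glance, because the rendered hub policy allows DNS egress to 10.0.0.0/8. A short standard-library check makes the mismatch concrete: it parses a trimmed copy of the log entry and tests the destination IP against the chart's DNS ipBlock CIDRs. The helper name `matching_ipblocks` is illustrative, not part of any tool.

```python
import ipaddress
import json

# DNS egress ipBlocks rendered by the chart's hub NetworkPolicy.
DNS_IPBLOCKS = ["10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16"]

# Trimmed copy of the denied policy-action log entry.
LOG_ENTRY = json.loads("""
{
  "jsonPayload": {
    "disposition": "deny",
    "connection": {"direction": "egress", "protocol": "udp",
                   "src_ip": "10.114.1.80", "dest_ip": "10.114.1.72",
                   "dest_port": 53},
    "src": {"pod_name": "hub-74bb745848-vf8lh", "namespace": "testjupyterhubdev"},
    "dest": {"pod_name": "node-local-dns-svv4s", "namespace": "kube-system"}
  }
}
""")


def matching_ipblocks(dest_ip: str, cidrs: list[str]) -> list[str]:
    """Return the CIDRs from `cidrs` that contain `dest_ip`."""
    ip = ipaddress.ip_address(dest_ip)
    return [cidr for cidr in cidrs if ip in ipaddress.ip_network(cidr)]


payload = LOG_ENTRY["jsonPayload"]
conn = payload["connection"]
matches = matching_ipblocks(conn["dest_ip"], DNS_IPBLOCKS)

print(f'{payload["src"]["pod_name"]} -> {payload["dest"]["pod_name"]} '
      f'{conn["protocol"]}/{conn["dest_port"]}: {payload["disposition"]}')
print("covered by ipBlock rule(s):", matches)
```

The script reports that 10.114.1.72 falls inside 10.0.0.0/8: on paper the DNS rule should match, yet Dataplane V2 still denied the packet.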

A solution to the issue

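The zero-to-jupyterhub-k8s core network policies allow egress to 10.0.0.0/8 on port 53, yet the network log shows that the hub pod could not reach the node-local-dns pod at 10.114.1.72, which lies inside that range. The cause is how Dataplane V2 implements network policies: judging from the log, it did not apply the chart's ipBlock rules to this pod-to-pod DNS traffic. (The Kubernetes documentation notes that ipBlock ranges should be cluster-external IPs, since Pod IPs are ephemeral and unpredictable.) To fix this, I added egress entries for the hub, proxy, and singleuser pods that allow port 53 only to pods in the kube-system namespace, selected by namespace label instead of by IP range: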

```yaml
singleuser:
  cloudMetadata:
    blockWithIptables: false
  networkPolicy:
    egress:
      - to:
          - namespaceSelector:
              matchLabels:
                kubernetes.io/metadata.name: kube-system
        ports:
          - protocol: UDP
            port: 53
          - protocol: TCP
            port: 53
proxy:
  chp:
    networkPolicy:
      egress:
        - to:
            - namespaceSelector:
                matchLabels:
                  kubernetes.io/metadata.name: kube-system
          ports:
            - protocol: UDP
              port: 53
            - protocol: TCP
              port: 53
hub:
  readinessProbe:
    initialDelaySeconds: 60
  networkPolicy:
    egress:
      - to:
          - namespaceSelector:
              matchLabels:
                kubernetes.io/metadata.name: kube-system
        ports:
          - protocol: UDP
            port: 53
          - protocol: TCP
            port: 53
```

GKE Autopilot with Cloud DNS

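GKE Autopilot is being migrated from kube-dns to Cloud DNS. On an Autopilot cluster that uses Cloud DNS, you only need the following config values to install JupyterHub. They add a singleuser egress rule to the cloud metadata server (169.254.169.254), which the default singleuser policy excludes: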

```yaml
singleuser:
  cloudMetadata:
    blockWithIptables: false
  networkPolicy:
    egress:
      # Allow outbound connections to the cloud metadata server
      - to:
          - ipBlock:
              cidr: 169.254.169.254/32
```
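Because the kube-dns and Cloud DNS configurations differ only in their network policy overrides, you could generate either values file from one script. This is a sketch, not part of the chart: the script and function names are hypothetical, and it writes JSON, relying on Helm parsing values files as YAML (which accepts JSON).

```python
import json
import sys

# Allow DNS (UDP and TCP port 53) to pods in the kube-system namespace,
# selected by namespace label rather than by IP range (kube-dns variant).
KUBE_SYSTEM_DNS_EGRESS = {
    "to": [{"namespaceSelector": {
        "matchLabels": {"kubernetes.io/metadata.name": "kube-system"}}}],
    "ports": [{"protocol": "UDP", "port": 53},
              {"protocol": "TCP", "port": 53}],
}

# Allow outbound connections to the cloud metadata server (Cloud DNS variant).
METADATA_SERVER_EGRESS = {
    "to": [{"ipBlock": {"cidr": "169.254.169.254/32"}}],
}


def build_values(cloud_dns: bool) -> dict:
    """Return Helm values for GKE Autopilot with Cloud DNS or with kube-dns."""
    singleuser = {"cloudMetadata": {"blockWithIptables": False}}
    if cloud_dns:
        singleuser["networkPolicy"] = {"egress": [METADATA_SERVER_EGRESS]}
        return {"singleuser": singleuser}
    singleuser["networkPolicy"] = {"egress": [KUBE_SYSTEM_DNS_EGRESS]}
    return {
        "singleuser": singleuser,
        "proxy": {"chp": {"networkPolicy": {"egress": [KUBE_SYSTEM_DNS_EGRESS]}}},
        "hub": {
            "readinessProbe": {"initialDelaySeconds": 60},
            "networkPolicy": {"egress": [KUBE_SYSTEM_DNS_EGRESS]},
        },
    }


if __name__ == "__main__":
    # Usage: python3 gen_values.py [--cloud-dns] > config.json
    json.dump(build_values("--cloud-dns" in sys.argv[1:]), sys.stdout, indent=2)
```

You would then pass the generated file to Helm with `--values config.json` in place of `config.yaml`.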