Artificial intelligence is now part of our everyday tools and technology. The ability of machines to learn and reason like humans is groundbreaking, and at the heart of machine intelligence are AI models. GPUs (Graphics Processing Units) are the main components for processing large amounts of data and building AI models. Jupyter Notebook is widely used to collect and cleanse data and to develop models, so it is important that the Jupyter Notebook session is GPU-enabled to process data and build AI models effectively and efficiently.

I have been working with JupyterHub and have posted about setting it up on GKE. When I started developing AI models with JupyterHub, I was not able to use a GPU in my JupyterHub instance. This post provides the steps and details for running a GPU-enabled JupyterHub on GKE Autopilot to process large data sets and develop AI models.

Prerequisites

- Basic understanding of Jupyter Notebook / JupyterLab

- Understanding of containerized application architecture

- Basic understanding of Kubernetes

- Basic understanding of Helm charts

- Experience setting up JupyterHub on GKE using the zero-to-jupyterhub Helm chart

- Basic understanding of GPUs and their role in AI

Issues with enabling GPU in JupyterHub

You can request GPU resources for your workload on GKE Autopilot by adding a few fields to the pod specification. Based on that, I made the following changes to my zero-to-jupyterhub Helm chart configuration.

1. Create user profiles

```yaml
singleuser:
  profileList:
    - display_name: "Default environment"
      description: "Minimal notebook environment for users."
      default: true
    - display_name: "GPU environment"
      description: "GPU enabled notebook environment for users."
```

Create two user profiles so that users can choose between a non-GPU and a GPU session: the first is the default environment, which does not enable a GPU, and the second enables a GPU.
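zero-to-jupyterhub hands `singleuser.profileList` to KubeSpawner's `profile_list`, where each profile is a plain dictionary. A minimal sketch of the two profiles as Python data, with a hypothetical helper `preselected_profile` that mirrors the selection rule assumed here (the entry marked `default: True`, otherwise the first entry). The helper is illustrative and not part of KubeSpawner.

```python
from typing import Any

# The two profiles from the Helm values, as the list of dicts
# that KubeSpawner's profile_list expects.
PROFILE_LIST: list[dict[str, Any]] = [
    {
        "display_name": "Default environment",
        "description": "Minimal notebook environment for users.",
        "default": True,
    },
    {
        "display_name": "GPU environment",
        "description": "GPU enabled notebook environment for users.",
    },
]


def preselected_profile(profiles: list[dict[str, Any]]) -> dict[str, Any]:
    """Return the profile the spawn form would preselect (hypothetical helper).

    Assumed rule: the first entry with default=True wins,
    otherwise the first entry in the list.
    """
    if not profiles:
        raise ValueError("profile list is empty")
    for profile in profiles:
        if profile.get("default", False):
            return profile
    return profiles[0]


if __name__ == "__main__":
    print(preselected_profile(PROFILE_LIST)["display_name"])
```

Run as a script, this prints `Default environment`, which is why users who do not pick a profile get a session without a GPU.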

2. Add a GPU-specific JupyterHub container image

```yaml
singleuser:
  profileList:
    - display_name: "Default environment"
      description: "Minimal notebook environment for users."
      default: true
    - display_name: "GPU environment"
      description: "GPU enabled notebook environment for users."
      kubespawner_override:
        image: quay.io/jupyter/pytorch-notebook:cuda12-python-3.11.8
```

I wanted to use PyTorch, so I selected the CUDA-enabled PyTorch notebook image, following the Jupyter Docker Stacks image selection guidelines.

3. Add GPU-specific fields

```yaml
singleuser:
  profileList:
    - display_name: "Default environment"
      description: "Minimal notebook environment for users."
      default: true
    - display_name: "GPU environment"
      description: "GPU enabled notebook environment for users."
      kubespawner_override:
        image: quay.io/jupyter/pytorch-notebook:cuda12-python-3.11.8
        node_selector:
          cloud.google.com/gke-accelerator: nvidia-tesla-t4
          cloud.google.com/gke-accelerator-count: "1"
        extra_resource_limits:
          nvidia.com/gpu: 1
```

I wanted to use an NVIDIA Tesla T4 GPU, so I set the accelerator type, the accelerator count, and the NVIDIA GPU resource limit accordingly.
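KubeSpawner copies `node_selector` into the pod's `spec.nodeSelector` and `extra_resource_limits` into the notebook container's `resources.limits`. The hypothetical helper below sketches the fragment the GPU profile should produce, which can be compared with the output of `kubectl get pod <pod-name> -o yaml`. Kubernetes node selector values are strings, so the accelerator count label is rendered as `"1"`, while the `nvidia.com/gpu` limit is a resource quantity. The container name `notebook` is an assumption about KubeSpawner's default.

```python
from typing import Any


def gpu_pod_fragment(accelerator: str, count: int) -> dict[str, Any]:
    """Sketch the pod-spec fields the GPU profile asks KubeSpawner to set.

    Hypothetical helper for illustration; KubeSpawner builds the real pod.
    """
    if count < 1:
        raise ValueError("count must be at least 1")
    return {
        "spec": {
            # nodeSelector is a string -> string map in Kubernetes,
            # so the count label must be a string, not an integer.
            "nodeSelector": {
                "cloud.google.com/gke-accelerator": accelerator,
                "cloud.google.com/gke-accelerator-count": str(count),
            },
            "containers": [
                {
                    "name": "notebook",  # assumed KubeSpawner container name
                    # GPUs are extended resources, requested through limits.
                    "resources": {"limits": {"nvidia.com/gpu": count}},
                }
            ],
        }
    }


if __name__ == "__main__":
    import json

    print(json.dumps(gpu_pod_fragment("nvidia-tesla-t4", 1), indent=2))
```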

When I started a GPU environment session with this configuration, the user session pod was launched on the requested GPU node on GKE Autopilot. However, the allocated GPU was not available in the Jupyter session. I verified this with the following checks.

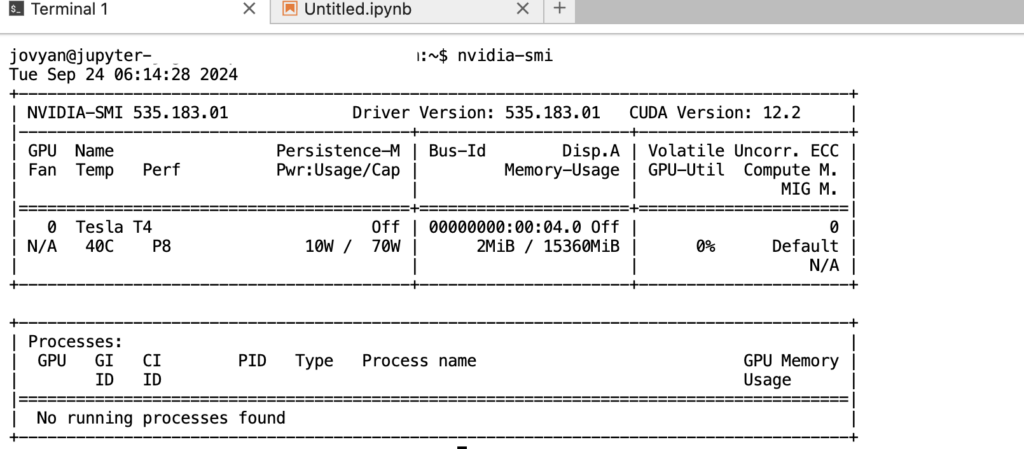

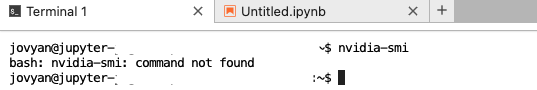

1. Check GPU state and monitor GPU

A CUDA-enabled container is expected to provide the nvidia-smi utility, which checks the state of NVIDIA GPUs and monitors them. When I ran nvidia-smi in the JupyterHub session terminal, it failed with a ‘command not found’ error.

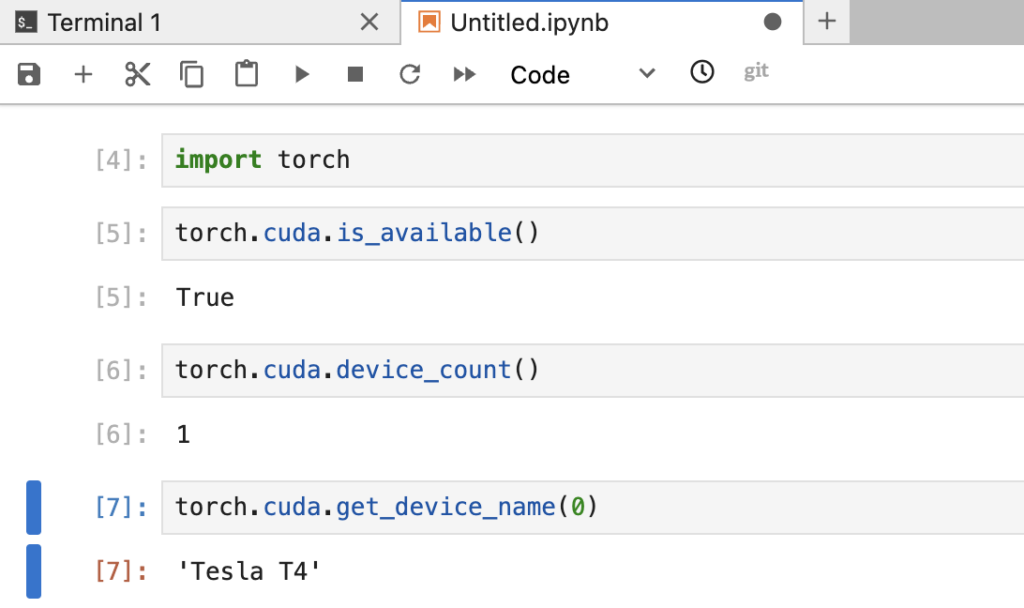

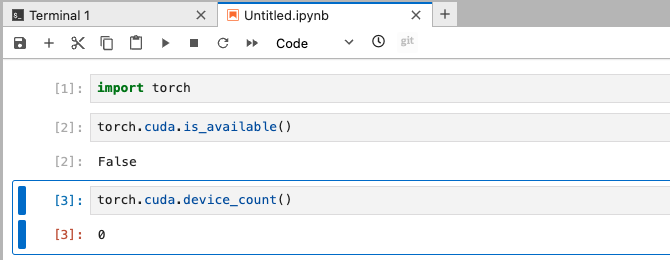
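The same check can be scripted from a notebook cell. The sketch below looks for `nvidia-smi` on `PATH` with `shutil.which` and also in `/usr/local/nvidia/bin`, which is where GKE documents mounting the NVIDIA driver utilities inside GPU containers (treat that path as an assumption to confirm for your cluster version). `find_nvidia_smi`, `gpu_query`, and `GKE_NVIDIA_BIN` are hypothetical names; the `--query-gpu` and `--format` options are standard nvidia-smi flags.

```python
import os
import shutil
import subprocess
from typing import Optional

# Assumed fallback location of the NVIDIA driver utilities on GKE GPU pods.
GKE_NVIDIA_BIN = "/usr/local/nvidia/bin"


def find_nvidia_smi(search_path: Optional[str] = None) -> Optional[str]:
    """Return the full path to nvidia-smi, or None if it cannot be found.

    search_path defaults to $PATH with GKE_NVIDIA_BIN appended.
    """
    if search_path is None:
        search_path = os.pathsep.join(
            p for p in (os.environ.get("PATH", ""), GKE_NVIDIA_BIN) if p
        )
    return shutil.which("nvidia-smi", path=search_path)


def gpu_query(binary: str) -> str:
    """Ask nvidia-smi for the GPU name and total memory as CSV."""
    result = subprocess.run(
        [binary, "--query-gpu=name,memory.total", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=True,
        timeout=30,
    )
    return result.stdout.strip()


if __name__ == "__main__":
    smi = find_nvidia_smi()
    if smi is None:
        print("nvidia-smi not found: the session cannot see the GPU driver")
    else:
        try:
            print(f"{smi}: {gpu_query(smi)}")
        except (subprocess.CalledProcessError, subprocess.TimeoutExpired) as exc:
            print(f"nvidia-smi found at {smi} but failed: {exc}")
```

If the binary is found only through the fallback directory, the driver is mounted but not on the image's `PATH`.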

2. Check CUDA availability

PyTorch has a module to check whether CUDA is available. When run in a JupyterHub session with PyTorch installed, it checks CUDA availability in your container image and its ability to access the GPU. In my session, PyTorch did not find CUDA with the configured container image.
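The PyTorch check can be wrapped in a small report. `torch.cuda.is_available()`, `torch.cuda.device_count()`, `torch.cuda.get_device_name()`, and `torch.version.cuda` are standard PyTorch calls; the `cuda_report` wrapper and its keys are hypothetical, and it degrades gracefully when PyTorch is not installed so it can run in any kernel.

```python
from typing import Any


def cuda_report() -> dict[str, Any]:
    """Summarise what PyTorch can see of CUDA in the current kernel."""
    try:
        import torch
    except ImportError:
        return {"torch_installed": False, "cuda_build": None,
                "cuda_available": False, "devices": []}

    available = torch.cuda.is_available()
    devices = []
    if available:
        devices = [torch.cuda.get_device_name(i)
                   for i in range(torch.cuda.device_count())]
    return {
        "torch_installed": True,
        # None means this PyTorch build was compiled without CUDA support.
        "cuda_build": torch.version.cuda,
        # False despite a CUDA build usually means the GPU/driver is not visible.
        "cuda_available": available,
        "devices": devices,
    }


if __name__ == "__main__":
    for key, value in cuda_report().items():
        print(f"{key}: {value}")
```

Separating `cuda_build` from `cuda_available` helps distinguish a CPU-only image from an image whose CUDA build cannot reach the GPU.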

Enable GPU on JupyterHub

Based on these tests, it appeared that the CUDA-enabled image from the Jupyter community was missing the NVIDIA GPU drivers and CUDA. I extended the container image and installed the GPU drivers and CUDA manually, but I still could not access the GPU in my JupyterHub session. In the end, I created a custom JupyterHub image based on the NVIDIA CUDA runtime container image, following the steps below. My target was a JupyterHub image with CUDA-enabled PyTorch.

1. Get the Jupyter Docker Stacks

I cloned the Jupyter Docker Stacks repository (jupyter/docker-stacks). The repo contains the Dockerfiles and configuration used to build the Jupyter notebook container images.

2. Create a GitHub Actions workflow

I created a workflow to build a custom JupyterHub image with CUDA-enabled PyTorch. The Jupyter Docker Stacks image relationships diagram shows which parent images must be custom-built to reach the target PyTorch image.

```yaml
name: Publish CUDA enabled JupyterHub image

on:
  workflow_dispatch:

jobs:
  build_and_publish:
    runs-on: ubuntu-latest
    permissions:
      contents: read
      packages: write  # lets GITHUB_TOKEN push images to ghcr.io
    steps:
      - uses: actions/checkout@v4
        with:
          repository: 'jupyter/docker-stacks'

      - name: Set up QEMU
        uses: docker/setup-qemu-action@v3

      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v3

      - name: Login to GitHub Container Registry
        uses: docker/login-action@v3
        with:
          registry: ghcr.io
          username: ${{ github.repository_owner }}
          password: ${{ secrets.GITHUB_TOKEN }}

      # Foundation image built on the NVIDIA CUDA runtime image
      - name: Build and push Docker stacks foundation image
        uses: docker/build-push-action@v6
        env:
          ROOT_CONTAINER: 'nvidia/cuda:12.4.1-runtime-ubuntu22.04'
        with:
          context: ./images/docker-stacks-foundation
          file: ./images/docker-stacks-foundation/Dockerfile
          tags: ghcr.io/vizeit/jupyterhub/docker-stacks-foundation
          push: true
          build-args: |
            ROOT_CONTAINER=${{ env.ROOT_CONTAINER }}

      - name: Build and push base notebook image
        uses: docker/build-push-action@v6
        env:
          BASE_CONTAINER: 'ghcr.io/vizeit/jupyterhub/docker-stacks-foundation'
        with:
          context: ./images/base-notebook
          file: ./images/base-notebook/Dockerfile
          tags: ghcr.io/vizeit/jupyterhub/base-notebook
          push: true
          build-args: |
            BASE_CONTAINER=${{ env.BASE_CONTAINER }}

      - name: Build and push minimal notebook image
        uses: docker/build-push-action@v6
        env:
          BASE_CONTAINER: 'ghcr.io/vizeit/jupyterhub/base-notebook'
        with:
          context: ./images/minimal-notebook
          file: ./images/minimal-notebook/Dockerfile
          tags: ghcr.io/vizeit/jupyterhub/minimal-notebook
          push: true
          build-args: |
            BASE_CONTAINER=${{ env.BASE_CONTAINER }}

      - name: Build and push scipy notebook image
        uses: docker/build-push-action@v6
        env:
          BASE_CONTAINER: 'ghcr.io/vizeit/jupyterhub/minimal-notebook'
        with:
          context: ./images/scipy-notebook
          file: ./images/scipy-notebook/Dockerfile
          tags: ghcr.io/vizeit/jupyterhub/scipy-notebook
          push: true
          build-args: |
            BASE_CONTAINER=${{ env.BASE_CONTAINER }}

      - name: Build and push pytorch notebook image
        uses: docker/build-push-action@v6
        env:
          BASE_CONTAINER: 'ghcr.io/vizeit/jupyterhub/scipy-notebook'
        with:
          context: ./images/pytorch-notebook
          file: ./images/pytorch-notebook/cuda12/Dockerfile
          tags: ghcr.io/vizeit/jupyterhub/pytorch-notebook:custom-CUDA-1.0.0
          push: true
          build-args: |
            BASE_CONTAINER=${{ env.BASE_CONTAINER }}
```

As shown in the workflow above, the foundation image is built on the NVIDIA CUDA runtime image through ROOT_CONTAINER. The workflow pushes the final custom image to GitHub Container Registry (ghcr.io), but you may need to push it to a cloud registry such as GCP Artifact Registry to pull and use it in your GKE cluster. This example pushes all the images to ghcr.io because of disk space limits on the free GitHub-hosted runners. If you have access to an enterprise GitHub runner, you can use a local Docker registry for all the images except the final PyTorch image:

```yaml
name: Publish CUDA enabled JupyterHub image

on:
  workflow_dispatch:

jobs:
  build_and_publish:
    runs-on: ubuntu-latest
    permissions:
      contents: read
      packages: write  # lets GITHUB_TOKEN push the final image to ghcr.io
    services:
      # Local registry for the intermediate images
      registry:
        image: registry:latest
        ports:
          - 5000:5000
    steps:
      - uses: actions/checkout@v4
        with:
          repository: 'jupyter/docker-stacks'

      - name: Set up QEMU
        uses: docker/setup-qemu-action@v3

      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v3
        with:
          driver-opts: network=host  # lets BuildKit reach localhost:5000

      # Needed because the final image is still pushed to ghcr.io
      - name: Login to GitHub Container Registry
        uses: docker/login-action@v3
        with:
          registry: ghcr.io
          username: ${{ github.repository_owner }}
          password: ${{ secrets.GITHUB_TOKEN }}

      - name: Build and push Docker stacks foundation image
        uses: docker/build-push-action@v6
        env:
          ROOT_CONTAINER: 'nvidia/cuda:12.4.1-runtime-ubuntu22.04'
        with:
          context: ./images/docker-stacks-foundation
          file: ./images/docker-stacks-foundation/Dockerfile
          tags: localhost:5000/jupyterhub/docker-stacks-foundation
          push: true
          build-args: |
            ROOT_CONTAINER=${{ env.ROOT_CONTAINER }}

      - name: Build and push base notebook image
        uses: docker/build-push-action@v6
        env:
          BASE_CONTAINER: 'localhost:5000/jupyterhub/docker-stacks-foundation'
        with:
          context: ./images/base-notebook
          file: ./images/base-notebook/Dockerfile
          tags: localhost:5000/jupyterhub/base-notebook
          push: true
          build-args: |
            BASE_CONTAINER=${{ env.BASE_CONTAINER }}

      - name: Build and push minimal notebook image
        uses: docker/build-push-action@v6
        env:
          BASE_CONTAINER: 'localhost:5000/jupyterhub/base-notebook'
        with:
          context: ./images/minimal-notebook
          file: ./images/minimal-notebook/Dockerfile
          tags: localhost:5000/jupyterhub/minimal-notebook
          push: true
          build-args: |
            BASE_CONTAINER=${{ env.BASE_CONTAINER }}

      - name: Build and push scipy notebook image
        uses: docker/build-push-action@v6
        env:
          BASE_CONTAINER: 'localhost:5000/jupyterhub/minimal-notebook'
        with:
          context: ./images/scipy-notebook
          file: ./images/scipy-notebook/Dockerfile
          tags: localhost:5000/jupyterhub/scipy-notebook
          push: true
          build-args: |
            BASE_CONTAINER=${{ env.BASE_CONTAINER }}

      - name: Build and push pytorch notebook image
        uses: docker/build-push-action@v6
        env:
          BASE_CONTAINER: 'localhost:5000/jupyterhub/scipy-notebook'
        with:
          context: ./images/pytorch-notebook
          file: ./images/pytorch-notebook/cuda12/Dockerfile
          tags: ghcr.io/vizeit/jupyterhub/pytorch-notebook:custom-CUDA-1.0.0
          push: true
          build-args: |
            BASE_CONTAINER=${{ env.BASE_CONTAINER }}
```
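Both workflows build the same five-image chain, with each stage receiving its parent through the `BASE_CONTAINER` build argument (`ROOT_CONTAINER` for the foundation image). A minimal sketch that derives those arguments from the chain, which can help when adding or removing a stage; the `build_plan` helper, `BuildStep`, and the single registry prefix are hypothetical, not part of docker-stacks.

```python
from typing import NamedTuple, Optional

# Build order used by both workflows: each image is built FROM the previous one.
CHAIN = [
    "docker-stacks-foundation",
    "base-notebook",
    "minimal-notebook",
    "scipy-notebook",
    "pytorch-notebook",
]


class BuildStep(NamedTuple):
    image: str
    build_arg: str  # the build argument passed to this stage's Dockerfile


def build_plan(root: str, registry: str, chain: list[str]) -> list[BuildStep]:
    """Pair every image in the chain with the build argument naming its parent."""
    steps: list[BuildStep] = []
    parent: Optional[str] = None
    for image in chain:
        if parent is None:
            # The foundation image starts from the CUDA runtime root image.
            arg = f"ROOT_CONTAINER={root}"
        else:
            arg = f"BASE_CONTAINER={registry}/{parent}"
        steps.append(BuildStep(f"{registry}/{image}", arg))
        parent = image
    return steps


if __name__ == "__main__":
    plan = build_plan("nvidia/cuda:12.4.1-runtime-ubuntu22.04",
                      "localhost:5000/jupyterhub", CHAIN)
    for step in plan:
        print(f"{step.image:50} {step.build_arg}")
```

In the local-registry workflow the last stage is tagged for ghcr.io instead; the sketch keeps one prefix for simplicity.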

3. Use the custom image in the zero-to-jupyterhub Helm chart configuration

```yaml
singleuser:
  profileList:
    - display_name: "Default environment"
      description: "Minimal notebook environment for users."
      default: true
    - display_name: "GPU environment"
      description: "GPU enabled notebook environment for users."
      kubespawner_override:
        image: us-central1-docker.pkg.dev/my-project/jupyter/pytorch-notebook:custom-CUDA-1.0.0
        node_selector:
          cloud.google.com/gke-accelerator: nvidia-tesla-t4
          cloud.google.com/gke-accelerator-count: "1"
        extra_resource_limits:
          nvidia.com/gpu: 1
```

Now, when you launch a JupyterHub session with the GPU environment selected, the GPU and CUDA availability checks should pass.
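The GPU profile pulls its image from Artifact Registry, whose Docker image references follow `LOCATION-docker.pkg.dev/PROJECT-ID/REPOSITORY/IMAGE:TAG`. A small sketch that assembles and sanity-checks such a reference before it goes into the Helm values; `artifact_registry_image` is a hypothetical helper, the name checks are a simplified subset of Docker's reference grammar, and `us-central1`, `my-project`, and `jupyter` are the placeholder values from the configuration above.

```python
import re

# Docker tag grammar: word character first, then [\w.-], 128 characters max.
TAG_RE = re.compile(r"[A-Za-z0-9_][A-Za-z0-9_.-]{0,127}")
# Simplified repository path component: lowercase alphanumerics with separators.
COMPONENT_RE = re.compile(r"[a-z0-9]+(?:[._-][a-z0-9]+)*")


def artifact_registry_image(location: str, project: str, repository: str,
                            image: str, tag: str) -> str:
    """Build LOCATION-docker.pkg.dev/PROJECT/REPOSITORY/IMAGE:TAG."""
    for part in (repository, image):
        if not COMPONENT_RE.fullmatch(part):
            raise ValueError(f"invalid repository component: {part!r}")
    if not TAG_RE.fullmatch(tag):
        raise ValueError(f"invalid tag: {tag!r}")
    return f"{location}-docker.pkg.dev/{project}/{repository}/{image}:{tag}"


if __name__ == "__main__":
    print(artifact_registry_image("us-central1", "my-project", "jupyter",
                                  "pytorch-notebook", "custom-CUDA-1.0.0"))
```

Uppercase letters are valid in the tag (`custom-CUDA-1.0.0`) but not in the image path, which is a common cause of rejected pushes.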