While working on a project, I came across a use case to bulk delete documents in Azure Cosmos DB Container using Azure Data Factory (ADF). There are few approaches, however it mainly involves either implementing a stored procedure or a function. This article provides the steps to implement bulk delete of documents in Cosmos DB entirely in a ADF flow.

Problem statement

Azure Cosmos DB SQL API has a large quantity of documents. The Azure Data Factory pipeline requires to bulk delete documents before loading new set of documents

Step 1: Prerequisites

- Access to Azure Cloud

- A data source, either a csv file or excel file with the data

- A data sink, Cosmos DB SQL API instance

- ADF pipeline that extracts the source, transforms the data from the source and loads into Cosmos DB sink

Step 2: Add a dataflow to existing pipeline to bulk delete documents

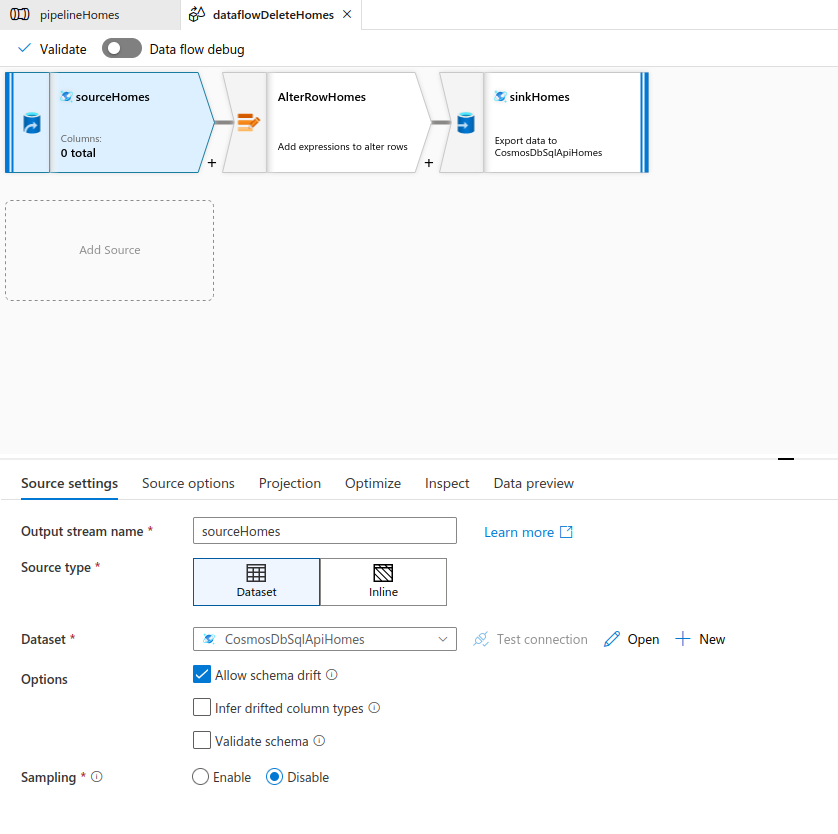

i. Add source pointing to the Cosmos DB container that has records to be deleted

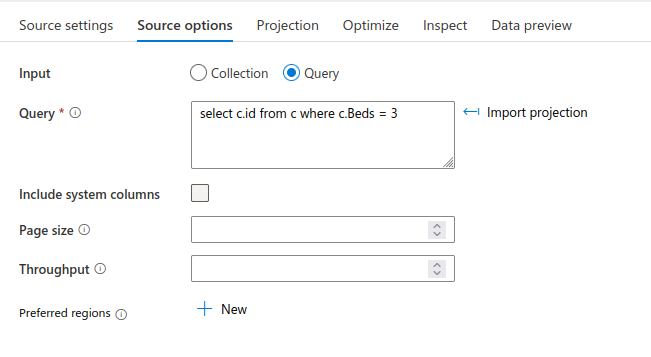

ii. Go to the ‘Source options’ for the source and select ‘Query’ so you can enter the SQL query that will retrieve the documents to delete. In the example here, I am selecting all 3 bedroom home listing so those can be deleted from Cosmos DB

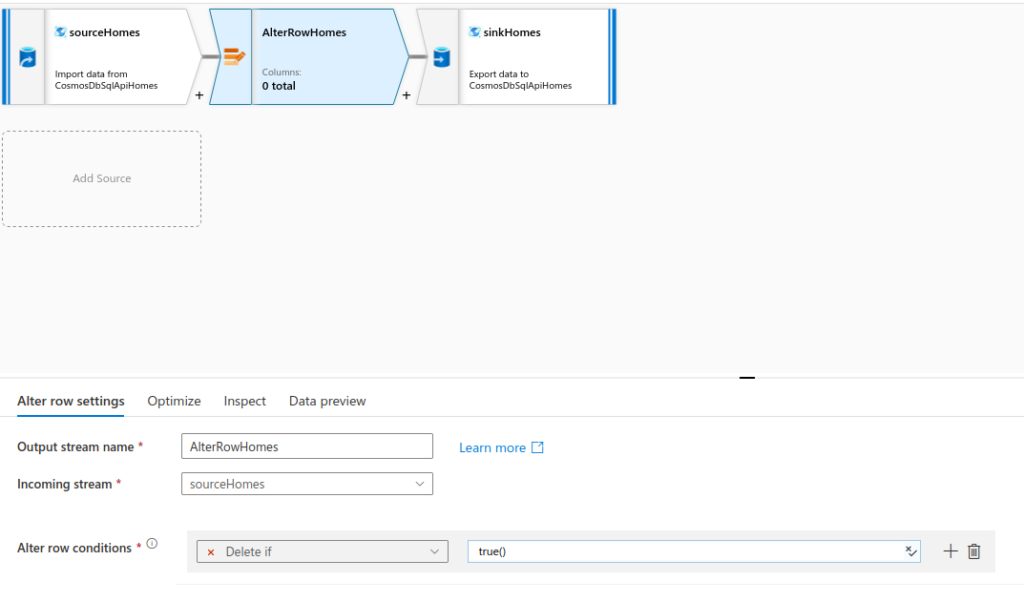

iii. Add ‘Alter Row’ transformation to the source and go to ‘Alter row settings’. Select ‘Delete if’ under ‘Alter row conditions’ and enter ‘true()’ in the expression

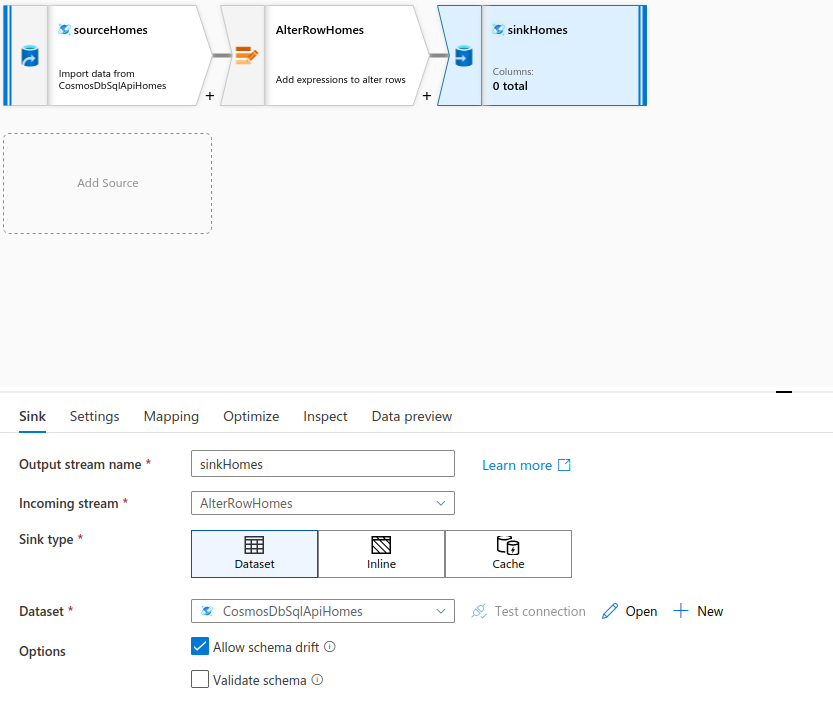

iv. Add sink pointing to the same Cosmos DB container

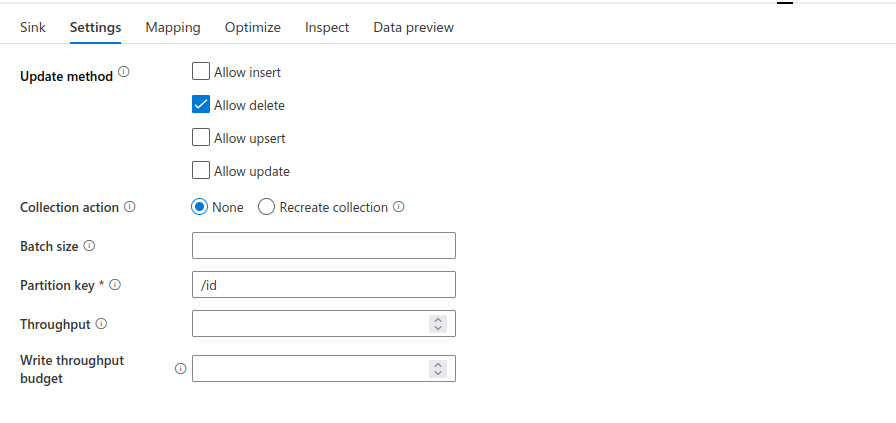

v. Go to sink settings and select ‘Allow delete’ under ‘Update method’. If Cosmos DB has a large set of documents to delete, provide ‘Batch size’ to split the deletion

To bulk delete documents in Azure Cosmos DB, Azure Data Factory provides easier approach because the flow is entirely in the data factory and it does not call external stored procedures or functions. This also allows better control over the data flow and provides better troubleshooting mechanism in case of any errors