Apache Airflow supports multiple database backends; PostgreSQL, MySQL and SQLite. However, SQLite is only recommended for the development purposes due to many limitations. That leaves PostgreSQL and MySQL as two options for Apache Airflow database backend for a production environment. When I started working on a framework using Apache Airflow, I chose MySQL as a backend database. However, I recently ran into an issue when workload started increasing. Airflow community recommended to migrate to PostgreSQL to avoid such issues. Before migrating the database, I wanted to compare both the databases with an Apache Airflow instance having a production like environment and workload. This comparison was important to ensure that database backend is also part of Apache Airflow Optimizations. This post compares Apache Airflow Database Backend MySQL vs PostgreSQL by performing extensive load tests on an instance setup on Kubernetes.

Prerequisites

- Experience using Apache Airflow on kubernetes engine

- Experience building workflows using Apache Airflow

- Basic understanding of Database Backends

Apache Airflow instance important configuration and settings

| Airflow container image tag | 2.9.2-python3.10 |

| Executor | CeleryKubernetesExecutor |

| Celery workers replicas | 4 |

| Celery workers autoscaling enabled | true |

| Celery workers autoscaling max replicas | 8 |

| Celery workers memory | 30Gi |

| Celery workers cpu | 5 |

| Scheduler replicas | 2 |

| Scheduler memory | 30Gi |

| Scheduler cpu | 5 |

| AIRFLOW__CORE__MAX_ACTIVE_RUNS_PER_DAG | 8 |

| AIRFLOW__CORE__MAX_ACTIVE_TASKS_PER_DAG | 96 |

| AIRFLOW__CORE__PARALLELISM | 160 |

| AIRFLOW__SCHEDULER__MAX_TIS_PER_QUERY | 0 |

| AIRFLOW__CELERY__WORKER_CONCURRENCY | 40 |

| pgbouncer enabled (for PostgreSQL) | true |

| pgbouncer memory (for PostgreSQL) | 30Gi |

| pgbouncer cpu (for PostgreSQL) | 5 |

| MySQL / PostgreSQL memory | 4GB |

| MySQL / PostgreSQL cpu | 1 |

Apache Airflow DAG to perform the load test

I created two DAGs to perform the load test. First, a DAG with one dynamic task mapping that expands into number of tasks at run time based on the input parameter. Second, a DAG with multiple dynamic task mapping under a dynamic task mapping group that expands into number of tasks at run time based on the input parameter

"""DAG file to load test with Dynamic task mapping"""

from datetime import datetime

from airflow.decorators import task

from airflow.models.dag import dag

@task

def make_list(no_task: str):

return [i for i in range(int(no_task if no_task else 100))]

@task

def consumer(arg):

print(arg)

with DAG(

dag_id="dynamic-map", start_date=datetime(2024, 06, 01), schedule_interval=None

) as dag:

no_of_tasks = "{{ dag_run.conf['no_of_tasks'] }}"

consumer.expand(arg=make_list(no_of_tasks))Code language: Python (python)"""DAG file to load test with Dynamic task mapping group"""

from datetime import datetime

from airflow.decorators import task

from airflow.models.dag import dag

@task

def make_list(no_task: str):

return [i for i in range(int(no_task if no_task else 100))]

@task

def consumer(arg):

print(arg)

@task

def agent(arg):

print(arg)

@task

def company(arg):

print(arg)

@task_group

def supplier(arg):

consumer(arg)

agent(arg)

company(arg)

with DAG(

dag_id="dynamic-map-group", start_date=datetime(2024, 06, 01), schedule_interval=None

) as dag:

no_of_tasks = "{{ dag_run.conf['no_of_tasks'] }}"

supplier.expand(arg=make_list(no_of_tasks))Code language: JavaScript (javascript)I wrote a simple python script to submit multiple dagruns for these two DAGs with increasing value of ‘no_of_tasks’ input parameter for the dagruns. This was done first for MySQL database backend and then for PostgreSQL

from requests.auth import HTTPBasicAuth

from json import dumps

from requests import post

for i in range(75, 100):

run = {

"no_of_tasks": str(i * 4)

}

post("https://k8airflowwebserverdomainname/api/v1/dags/dynamic-map/dagRuns",

data=dumps({"conf": run}),

headers={"content-type": "application/json"}

auth=HTTPBasicAuth(username="user", password="password"))

post("https://k8airflowwebserverdomainname/api/v1/dags/dynamic-map-group/dagRuns",

data=dumps({"conf": run}),

headers={"content-type": "application/json"}

auth=HTTPBasicAuth(username="user", password="password"))Code language: JavaScript (javascript)Comparison between MySQL and PostgreSQL database backend

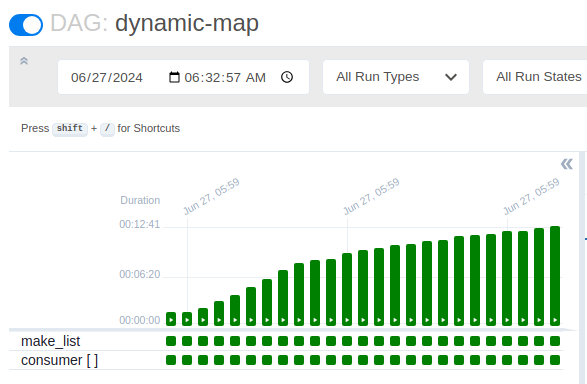

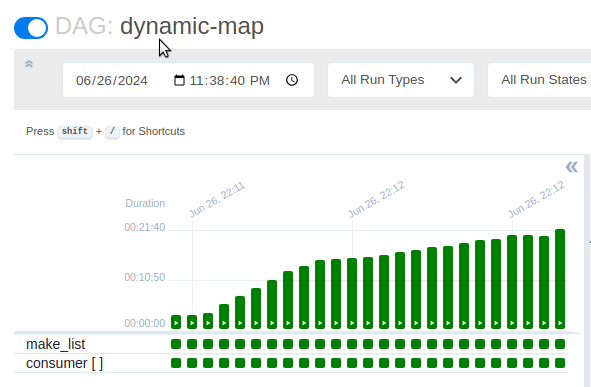

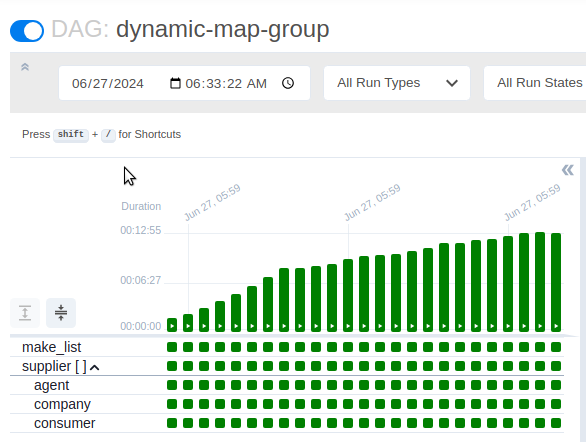

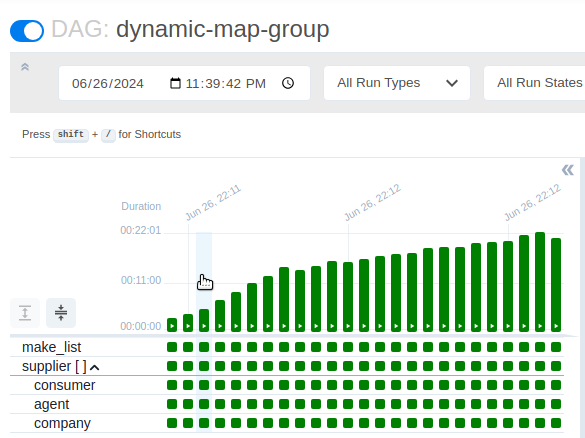

Apache Airflow DAG runs

Execution times for the MySQL and PostgreSQL database backends were drastically different. PostgreSQL database backend performed slower than MySQL database backend

Database metrics

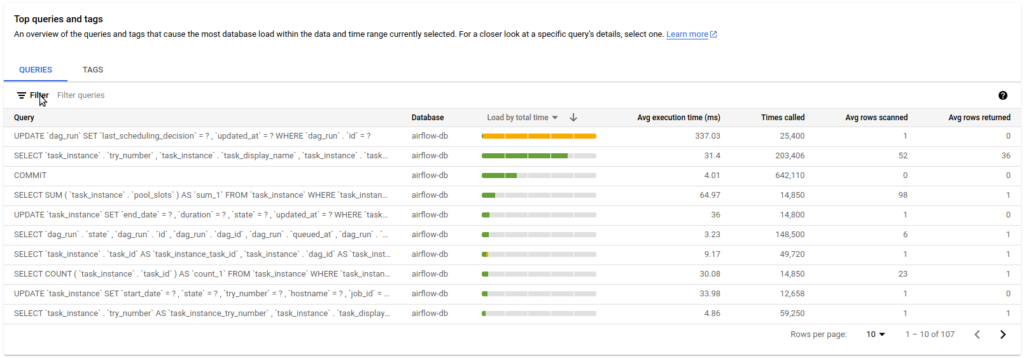

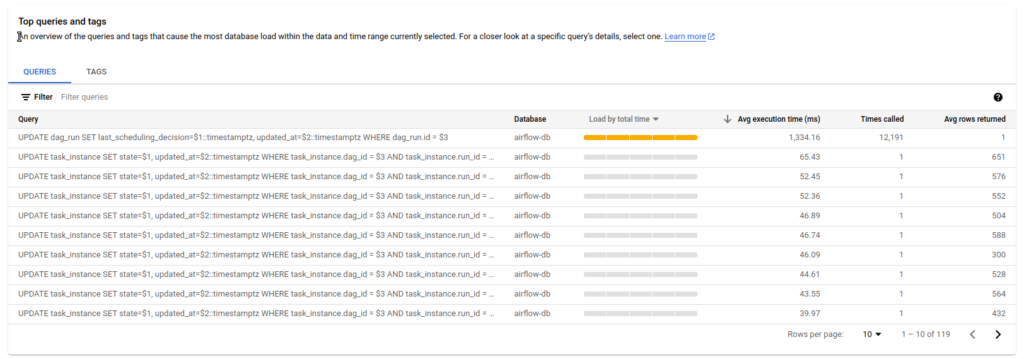

Top queries causing the most database load

Database updates (writes) with PostgreSQL were significantly slower compared to MySQL

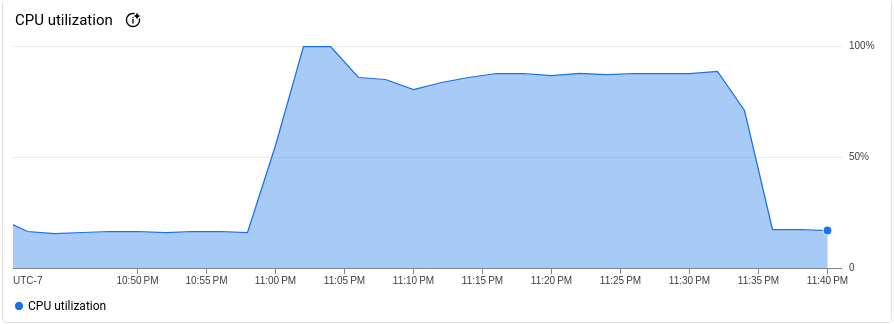

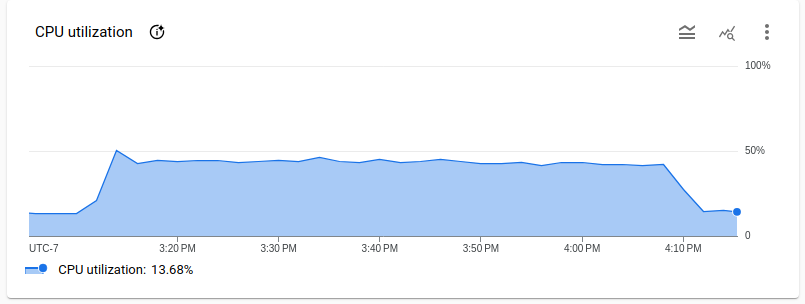

CPU

MySQL database consumed more CPU compared to PostgreSQL in my test. However, I did not notice any impact of that on the database performance during the load test

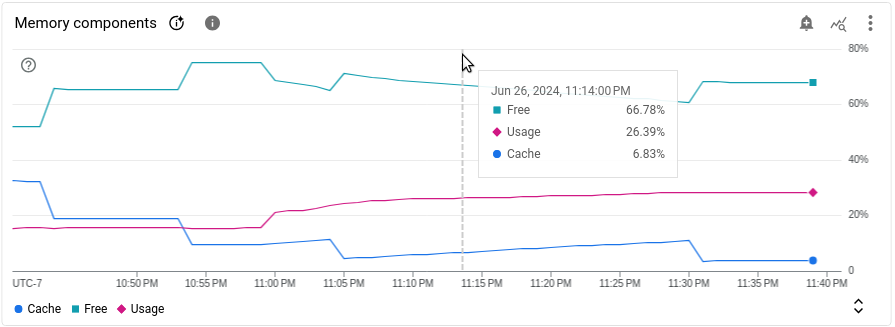

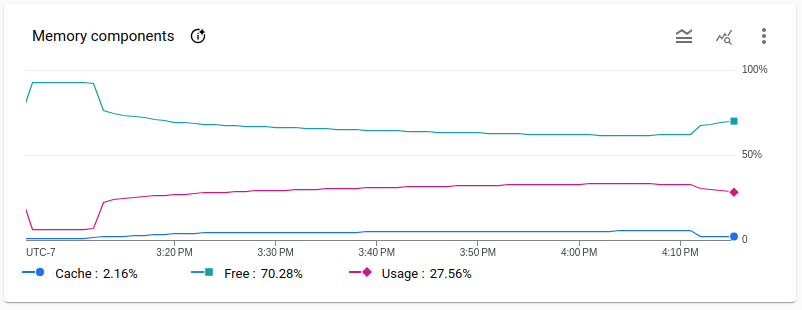

Memory

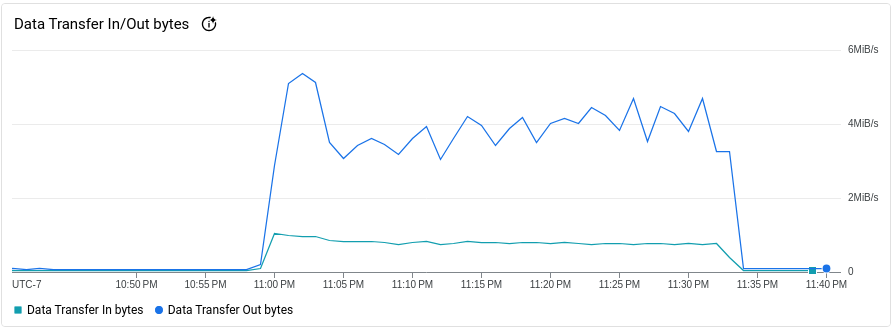

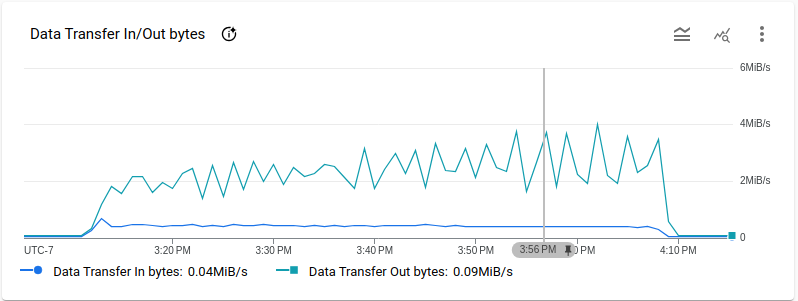

Data transfer

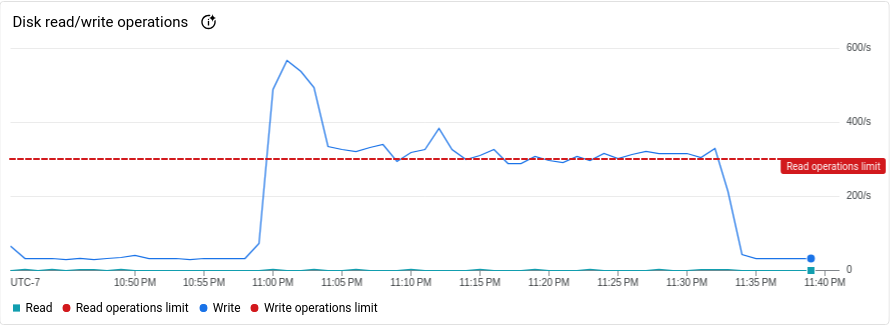

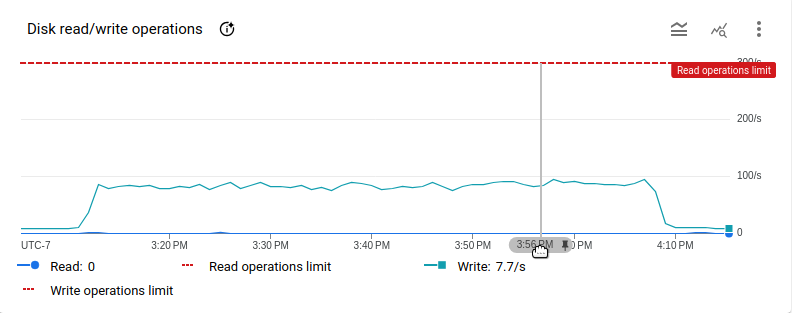

Disk read/write

Disk read/write metric shows that PostgreSQL write operations were slower than MySQL

Observations

Both the database backends were stable in the load test. PostgreSQL performed slower compared to MySQL. PostgreSQL setup also required additional pod for pgbouncer to manage the database connection pool which is an additional running/fixed cost compared to MySQL